Published 2025-11-04.

Last modified 2025-11-08.

Time to read: 16 minutes.

llm collection.

Did you know that the computers on your LAN can pool their VRAM so that your LLMs run across the entire network? That means a modest laptop, attached via a solid Wi-Fi connection, could employ some extra local horsepower for AI tasks. If this type of topic interests you, please read the entire series.

- Claude Code Is Magnificent But Claude Desktop Is a Hot Mess

- Google Gemini Code Assist

- CodeGPT

- AI Planning vs. Waterfall Project Management

- Renting vs. Purchasing GPUs for LLMs Throughout Canada

- Best Local LLMs For Coding

- Running an LLM on the Windows Ollama app

- Early Draft: Multi-LLM Agent Pipelines

- MiniMax-M2 and Mini-Agent Review

- LLM Societies

This article follows Running an LLM on the Windows Ollama app, which discussed how to set up one of the currently leading LLMs for coding with Ollama by using the Continue.dev extension for Visual Studio Code.

If you find any unfamiliar terminology or concepts, please read Renting vs. Purchasing GPUs for LLMs Throughout Canada.

GPUs and performance were examined in Best Local LLMs For Coding.

If you are just joining us now, my main Windows workstation is called Bear, and my main native Ubuntu server is called Gojira. Hopefully that helps make the material that follows less confusing!

This is an early draft of a work in progress. Grok was the initial source of most of the information, and as usual, it was extremely optimistic and sometimes not very realistic. Over many iterations I removed the junk, verified against other sources, dug into the good stuff, and wove the story as I thought appropriate. I say more about this in the conclusion.

I am about to start testing the setups described in this article. The article will be updated as work progresses and I am able to report results.

- We begin by discussing how using two specialized LLMs with the Continue.dev Visual Studio Code Extension yields faster and better responses than just using one model.

- We then discuss how using three specialized models potentially yields even faster and better responses.

- Next we discuss using Ollama Desktop and custom code instead of Visual Studio and Continue.dev.

- Orchestration of 5 or more LLMs without overloading the PC running them is discussed next.

- Next, we compute bandwidth requirements for quick response when 5 remote LLMs are configured.

- We then briefly discuss architecture and deployment considerations.

- Finally, the section on interleaved preloading for sequential execution discusses how to obtain maximum performance from on-premises GPUs.

Overview

You can make GLM-Z1-9B (a thinking model introduced in the previous article) and gemma2:9b-instruct-q5_K_M (a doing model) cooperate in a dual-model agent pipeline using the Continue.dev extension for Visual Studio Code and Ollama, with GLM-Z1-9B as the planner and reasoner and gemma2 as the executor.

The pattern is to repeat the following until done: the thinker (GLM-Z1-9B) prepares a <think> plan, enhanced with semantically relevant context retrieved via nomic-embed-text embeddings from the codebase, and sends it to the doer (Gemma 2), which provides agency by using tools for file editing, API calling, and other actions.

Process Flow

Below are all the steps and Continue.dev roles mentioned in the article. The pattern repeats until success or max iterations (5–10 turns).

| Step | Process | Role |
|---|---|---|
| 0 | User provides query | Authorized human |
| 1 | Analyze task and codebase; output <think>numbered steps</think> plan. | chat with <think> in prompt (GLM-Z1-9B) |
| 2 | Receive plan; generate clean unified diff; output before the editor sees it. | edit (Qwen3-Coder) |
| 3 | Receive diff; validate syntax, logic, security; output <review>pass</review> or <review>issues: ...</review>. | review (Codestral) |
| 4 | Receive validated diff; generate/run pytest cases; output <test_result>pass/fail</test_result>. | test (DeepSeek-Coder) |
| 5 | If test fails, analyze traceback; output <debug>fix plan</debug>; loop to Step 2. | debug (GLM-Z1-9B or Qwen3-Coder) |
| 6 | Receive final diff; apply via tools (e.g., file edit); output <tool_result>success/error</tool_result>. | apply (Gemma 2) |
| 7 | If success, generate docstrings/README updates; output <docs>updated</docs>. | docs (Gemma 2) |
| 8 | If error, route back to Step 1 with feedback; end on success or max iterations. | Orchestrator (Continue.dev) |

The above is a ReAct-style feedback loop (Reason and Act). Tool results (e.g., file edit success/failure) are automatically appended as context items to the next prompt. The thinker model considers this and decides to refine, re-plan, or stop, all without any human interaction.
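The feedback loop can be sketched in a few lines of Python. This is only an illustration of the control flow, not how Continue.dev implements it. The names `run_agent_loop`, `fake_think`, and `fake_act` are hypothetical, and the two stub functions stand in for real model calls.

```python
from typing import Callable, Optional


def run_agent_loop(
    task: str,
    think: Callable[[str, Optional[str]], str],
    act: Callable[[str], str],
    max_iterations: int = 10,
) -> tuple[bool, int]:
    """Alternate think/act until the doer reports success or the turn limit is hit."""
    feedback: Optional[str] = None
    for turn in range(1, max_iterations + 1):
        plan = think(task, feedback)   # the thinker sees the previous tool result, if any
        result = act(plan)             # the doer answers with a <tool_result> string
        if "<tool_result>success</tool_result>" in result:
            return True, turn
        feedback = result              # a failure becomes context for the next turn
    return False, max_iterations


# Hypothetical stand-ins for the two models: the doer fails twice, then succeeds.
attempts = {"count": 0}


def fake_think(task: str, feedback: Optional[str]) -> str:
    return f"<think>plan for {task}; last result: {feedback}</think>"


def fake_act(plan: str) -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        return "<tool_result>error: file locked</tool_result>"
    return "<tool_result>success</tool_result>"


print(run_agent_loop("create weather.py", fake_think, fake_act))
```

With these stubs the loop stops on the third turn. Lowering `max_iterations` to 2 makes it give up instead.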

The loop continues until the task completes or a maximum iteration limit is hit. The default limit is 5–10 turns, configurable in config.json as "experimental": {"maxAgentIterations": 10}.

Models rely on context providers like @codebase and @docs. Embeddings ensure the thinker (e.g., GLM-Z1-9B) gets precise, relevant chunks (e.g., 15 snippets via nRetrieve=15), not the full repository. This keeps prompt evaluation fast (150–200 t/s) and reasoning focused.
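A toy sketch of embedding-based retrieval may make the nRetrieve and nFinal parameters more concrete. It assumes that nRetrieve is the number of candidates fetched by similarity and nFinal is the number kept for the prompt. The function names and the two-dimensional vectors are made up for illustration; real embeddings from nomic-embed-text have many more dimensions, and a real pipeline would rerank the candidates rather than just truncate them.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, chunks, n_retrieve=15, n_final=5):
    """chunks is a list of (text, vector) pairs; return the n_final best texts."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, c[1]), reverse=True)
    candidates = ranked[:n_retrieve]   # first pass: nearest neighbours
    # A reranker would reorder the candidates here; this sketch keeps similarity order.
    return [text for text, _ in candidates[:n_final]]


# Made-up 2-D "embeddings" for three code chunks.
chunks = [
    ("def login(): ...", [0.9, 0.1]),
    ("def logout(): ...", [0.5, 0.5]),
    ("README intro", [0.0, 1.0]),
]
print(retrieve([1.0, 0.0], chunks, n_retrieve=2, n_final=1))
```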

All Continue.dev Roles

The article mentions the following roles in the context of the triad and extended configurations. The model shown in parentheses is the one Grok suggested was the most suited for that role.

- apply, sometimes called executor, but that is not an official role (Gemma 2): Applies tools for file edits, API calls, etc.
- autocomplete (QwenCoder2.5 (1.5B and 7B)): Displays inline autocompletion suggestions as the user types.
- chat (any LLM): Converses with the user. When the system prompt includes <think>, this role performs planning.
- debug (GLM-Z1-9B or Qwen3-Coder): Analyzes errors and tracebacks, and proposes fixes.
- docs (Gemma 2): Generates docstrings and updates READMEs.
- edit (Qwen3-Coder): Generates unified diffs from plans.
- embed (Nomic-embed-text): Computes the embeddings used to retrieve semantically relevant context.
- plan (GLM-Z1-9B): High-level task decomposition and reasoning with <think> tags.
- rerank (voyageai/rerank-2): Estimates how relevant two messages are to each other.
- review (Codestral): Validates diffs for bugs, style, and security.
- test (DeepSeek-Coder): Creates and runs unit tests.

In addition, the orchestrator (which is not an official role) is Continue.dev itself. It manages routing, loops, and feedback at the system level; it is not an LLM.

Continue.dev offers suggestions as to which models might suit each role.

For more information, see the Continue.dev documentation.

Setup: config.json for Dual-Model Agent

systemMessage when

supportsTools or roles is present in those models.

{

"codebase": {

"embedOnStartup": true

},

"contextProviders": [

{

"name": "codebase",

"params": {

"nFinal": 5,

"nRetrieve": 15,

"useChunks": true

}

},

{

"name": "docs",

"params": {

"urls": [

"https://mslinn.com",

"https://go.dev",

"https://ollama.com",

"https://developer.mozilla.org/en-US/docs/Web"

]

}

}

],

"embeddingsProvider": {

"model": "nomic-embed-text",

"provider": "ollama"

},

"models": [

{

"apiBase": "http://localhost:11434",

"default": true,

"model": "sllm/glm-z1-9b",

"name": "thinker",

"provider": "ollama",

"roles": ["chat", "edit"],

"title": "GLM-Z1-9B (Thinker)"

},

{

"apiBase": "http://localhost:11434",

"model": "gemma2:9b-instruct-q5_K_M",

"name": "doer",

"provider": "ollama",

"roles": ["apply"],

"supportsTools": true,

"title": "Gemma 2 9B (Doer)"

}

],

"rules": [

{
"model": "thinker",
"name": "Thinker Plan",
"prompt": "Always output: <think>plan</think> then <tool_call>{...}</tool_call>"
},

{
"model": "doer",
"name": "Doer Execute",
"prompt": "Execute <tool_call> exactly. Return: <tool_result>output</tool_result>"
},

{
"name": "Auto-Route",
"prompt": "If <tool_call> is present, route to model 'doer'."
}

],
"systemMessage": "You are a dual-agent system. GLM-Z1-9B (thinker) plans with <think> and proposes edits. Gemma 2 (doer) executes with <tool_call>.",

"tabAutocompleteModel": {

"apiBase": "http://localhost:11434",

"model": "sllm/glm-z1-9b",

"provider": "ollama",

"title": "GLM-Z1-9B Autocomplete"

}

}

How to Use in Continue.dev

- Pull both models from PowerShell:

  $ ollama pull sllm/glm-z1-9b
  $ ollama pull gemma2:9b-instruct-q5_K_M

- Save the configuration and reload it. I found that the configuration was automatically reloaded, so there is no need to manually reload VS Code with CTRL+R.

- Check to make sure the Continue.dev agent mode is active, then send this prompt in the Continue.dev chat:

  @codebase Create a Python script that fetches weather from API and saves to file.

- GLM-Z1-9B responds:

  <think> 1. Create weather.py 2. Use requests.get() 3. Save JSON to data.json </think>
  <tool_call> {"action": "create_file", "path": "weather.py", "content": "...code..."} </tool_call>

- Press Continue to send to Gemma 2, which creates weather.py as requested.

Performance with Bear’s RTX 3060 12GB

I described the NVIDIA RTX 3060 as the best value for desktop GPUs for powering LLMs in Best Local LLMs For Coding.

If you need to refresh your memory, I wrote a few words on swap time earlier.

| Model | VRAM | Speed |

|---|---|---|

| GLM-Z1-9B | ~6.5 GB | 70 t/s |

| Gemma 2 9B | ~6.0 GB | 55 t/s |

| Total | ~12.5 GB | Does not fit in 12 GB VRAM, so expect a ~5–10% slowdown due to swapping |
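The arithmetic behind the last row can be checked with a short sketch. The helper name `vram_shortfall_gb` is hypothetical, and the calculation counts only the model weights listed in the table; it ignores the context (KV cache) and anything else using the GPU.

```python
def vram_shortfall_gb(model_sizes_gb, gpu_vram_gb):
    """Return how many GB exceed the GPU's VRAM (0.0 if everything fits)."""
    return max(0.0, sum(model_sizes_gb.values()) - gpu_vram_gb)


# Approximate sizes taken from the table above.
models = {"GLM-Z1-9B": 6.5, "Gemma 2 9B": 6.0}

print(vram_shortfall_gb(models, 12.0))  # 0.5 GB must live outside VRAM
```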

Auto-Routing with Rules

Add to rules:

{

"name": "Route to Doer",

"prompt": "If <tool_call> is present, send to model 'doer'."

}

Continue will automatically switch models to run the pipeline. This is not a streaming setup; it is a one-shot batch job. The steps are:

- Save the dual-model config.json.

- Download the gemma2:9b-instruct-q5_K_M model from PowerShell:

  PS C:\Users\Mike Slinn> ollama pull gemma2:9b-instruct-q5_K_M

- Reload Visual Studio Code with CTRL+R.

- Type this prompt in the chat:

  @codebase Make a hello world script

  The file is created automatically.

You have now combined two models: a thinker and a doer.

Is A Pipeline Slower Than Using Only One Model?

Before we can answer that question, we need to decide which models might perform the various tasks involved in helping people create software.

We looked at other LLMs earlier: DeepSeek-Coder-V2, Qwen3-Coder, Codestral, CodeLlama and gemma2. Are any of these close to GLM-Z1-9B’s coding ability while also being capable of significant agency?

Grok provided much of the following information, and I have not verified all of it yet.

Dual- vs. Single- Model Summary

Grok recommended GLM-Z1-9B as the best single model to use. I found that model was problematic by itself because it mostly spewed repetitive gibberish. Perhaps there might be a combination of settings that makes this model useful.

Grok rated Qwen3-Coder as the runner-up. It matches GLM-Z1-9B’s coding while providing significant agency via native tools and agentic fine-tuning. It’s the best hybrid for autonomous tasks like multi-file refactors or tool chains.

DeepSeek-Coder-V2: superior coding, but its agency is good rather than great; it is better suited to pure generation because it lacks full agency.

Codestral: Comparable coding with moderate agency; viable but not "significant."

CodeLlama: not competitive in 2025.

Gemma2: As an agent, matches or exceeds GLM-Z1; slightly below Qwen3-Coder.

If agency is key, swap to Qwen3-Coder-14B for a drop-in upgrade from GLM-Z1-9B; it has similar size/speed but has better tool support.

Comparing Execution Times

This section matches up two models (GLM-Z1-9B for thinking, and Gemma 2 9B for doing) against Qwen3-Coder 14B all by itself.

Grok provided all the following information, and I have not verified any of it yet.

To compare "total time taken," I assume a typical agentic coding task (e.g., "@codebase Refactor a 500-token Python function with multi-step planning and file edit," ~800 tokens input, ~400 tokens output). This includes prompt evaluation (processing input/context) plus generation (output tokens). Metrics are based on 2025 benchmarks for Ollama on RTX 3060 12 GB VRAM (Q5_K_M quantization, 8K context), averaged from community reports (e.g., Reddit/LocalLLaMA, Hugging Face evals). Dual-model adds ~0.5–1s overhead per switch (Continue.dev routing).

Estimated Speeds on RTX 3060 (Ollama, Q5_K_M, 8K Context)

Local LLM Server

I keep a reasonably powerful Ubuntu server running in the next room. It has a good CPU, but an older GPU.

Which of these processes could run on a lesser GPU, for example an EVGA GeForce GTX 1660?

The GTX 1660 (6 GB VRAM, Turing architecture) can run smaller models and supporting roles efficiently, but not the full 5+ model pipeline. Below are the viable processes from your enhanced workflow, ranked by compatibility.

Fully Supported (No Swap)

| Role | Model | VRAM | Speed | Notes |
|---|---|---|---|---|
| Retriever | nomic-embed-text | <1 GB | 0.5s/index | Always fits; essential for @codebase. |
| Executor | gemma3:4b-instruct-q5_K_M | ~3–4 GB | 80–120 t/s | Fast tool use; ideal replacement for Gemma 2 9B. |
| Tester | deepseek-coder:6.7b-q4_K_M | ~5 GB | 60–90 t/s | Test generation; fits with 8K context. |
| Documenter | gemma3:4b | ~4 GB | 80–100 t/s | Lightweight doc updates. |

Partially Supported (Minor Swap, ~10% Slowdown)

| Role | Model | VRAM | Notes |
|---|---|---|---|
| Planner | glm-z1-9b-q4_K_M | ~6.5 GB | 1–2 layers swap to RAM (~400ms); usable. |
| Debugger | Reuse Planner | n/a | Same as above. |

Not Viable (Excessive Swap)

| Role | Model | VRAM | Issue |
|---|---|---|---|
| Editor | qwen3-coder:14b | ~10 GB | Heavy swapping → 2–3s delays. |
| Reviewer | codestral:22b | ~10 GB | Too large; avoid. |

Recommended GTX 1660 Pipeline

User → Planner (GLM-Z1 Q4) → Retriever (nomic)

↓

Executor (Gemma 3 4B) → Tester (DeepSeek 6.7B)

↓

Documenter (Gemma 3 4B) → Done

Now imagine I am working on Bear, with its RTX 3060 and 12 GB VRAM, alongside this other lightly loaded and powerful Ubuntu server with an old GPU, on a mostly unused 1 Gb/s Ethernet LAN. Interleaving calls would allow GPU #1 to load and GPU #2 to preload, then process #3 would execute on GPU #1, etc. Or might an optimal collection of models be found that fits within all 18 GB of VRAM?

With Bear (RTX 3060, 12 GB VRAM) and a lightly loaded Ubuntu server (NVIDIA GeForce GTX 1660, 6 GB VRAM) connected via 1 Gb/s LAN, you could interleave model loading and execution to achieve zero swap, sub-2s latency per step, and full 18 GB VRAM utilization — better than Bear alone.

Bear: Heavy lifting (12 GB models)

Gojira: Fast, light agents (≤6 GB VRAM)

Strategy: Interleaved Preload + Sequential Execution

It turns out that the two networked computers can share their VRAM very efficiently and so no swapping is required so long as they both continue to run.

| Role | Model | GPU | VRAM | Swap? |
|---|---|---|---|---|
| plan | glm-z1-9b | Bear | 7 GB | No |
| edit | qwen3-coder:14b-q4_K_M | Bear | 8 GB | No |
| review | codestral:22b-q4_K_M | Bear | 12 GB | No |
| apply | gemma3:4b-instruct-q5_K_M | Gojira | 3.5 GB | No |
| test | deepseek-coder:6.7b-q4_K_M | Gojira | 5 GB | No |
| embed | nomic-embed-text | Bear | <1 GB | No |

Interleaved Execution (Zero Swap on Both)

Bear (12 GB): Planner → Editor → Reviewer

Gojira (6 GB): ↘︎ Executor → Tester

Workflow

- Bear: Run Planner → Send plan → Gojira preloads Executor

- Gojira: Run Executor → Send result → Bear preloads Editor

- Bear: Run Editor → Send diff → Gojira preloads Tester

- Gojira: Run Tester → Final
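One way the preload step might be triggered is sketched below. It relies on two documented behaviours of the Ollama API: a request to /api/generate that names a model but carries no prompt loads that model into memory, and keep_alive controls how long it stays loaded. The helper names `preload_request` and `preload` and the 10-minute keep-alive are assumptions for illustration; the host name gojira and port 11434 come from the configuration shown later in this article.

```python
import json
import urllib.request


def preload_request(base_url: str, model: str, keep_alive: str = "10m") -> urllib.request.Request:
    """Build a POST that asks Ollama to load a model without generating anything."""
    body = json.dumps({"model": model, "keep_alive": keep_alive}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def preload(base_url: str, model: str) -> bool:
    """Send the preload request; returns True when the server answers 200."""
    with urllib.request.urlopen(preload_request(base_url, model), timeout=120) as resp:
        return resp.status == 200


# Build (but do not send) the request that would warm up the executor on Gojira.
req = preload_request("http://gojira:11434", "gemma3:4b-instruct-q5_K_M")
print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```

To interleave, `preload` could be called from a background thread on Bear just before the planner starts generating, so that Gojira loads the executor while Bear is busy.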

Peak VRAM

- Bear: 12 GB (Reviewer) → zero swap

- Gojira: 5 GB (Tester) → zero swap
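The peak figures can be reproduced with a small sketch. It assumes only one model is resident per GPU at a time, which is what sequential execution implies. The embed model is left out because the table gives only an upper bound (<1 GB) for it. The name `peak_vram` is hypothetical.

```python
from collections import defaultdict

# GPU capacities and per-role placement, taken from the table above.
CAPACITY_GB = {"Bear": 12.0, "Gojira": 6.0}
PLACEMENT = [
    ("plan", "Bear", 7.0),
    ("edit", "Bear", 8.0),
    ("review", "Bear", 12.0),
    ("apply", "Gojira", 3.5),
    ("test", "Gojira", 5.0),
]


def peak_vram(placement):
    """Largest single model per host, assuming one resident model at a time."""
    peaks = defaultdict(float)
    for _role, host, gb in placement:
        peaks[host] = max(peaks[host], gb)
    return dict(peaks)


for host, gb in peak_vram(PLACEMENT).items():
    verdict = "fits" if gb <= CAPACITY_GB[host] else "swaps"
    print(f"{host}: peak {gb} GB of {CAPACITY_GB[host]} GB ({verdict})")
```

Note that Bear's peak equals its full capacity, so this only holds if nothing else is using that GPU.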

Network + Preload = No Penalty

| Step | Network | Preload | Total Delay |

|---|---|---|---|

| Plan → Executor | 0.2 ms | Parallel | 0 ms added |

| Result → Editor | 0.1 ms | Parallel | 0 ms added |

- Total Overhead: <0.5s per full task

- Quality: 100% of 5-model pipeline

- Reliability: No VRAM thrashing

Implementation (Continue + Remote Ollama)

// config.json on Bear

"models": [

{ "name": "planner", "model": "sllm/glm-z1-9b", "provider": "ollama" },

{ "name": "executor", "model": "gemma3:4b-instruct-q5_K_M", "provider": "ollama" }

],

"remoteModels": [

{ "name": "editor", "url": "http://gojira:11434", "model": "qwen3-coder:14b-q4_K_M" },

{ "name": "reviewer", "url": "http://gojira:11434", "model": "codestral:22b-q4_K_M" },

{ "name": "tester", "url": "http://gojira:11434", "model": "deepseek-coder:6.7b-q4_K_M" }

]

Rules:

{

"name": "Route Editor to Server",

"prompt": "If <think> present, send to remote model 'editor'"

}

Timing

| Step | Network Time | Total Step Time |

|---|---|---|

| Planner (Bear) | — | 3–4s |

| Editor (Gojira) | ~0.1s (diff) | 4–6s |

| Executor (Bear) | ~0.1s | 2–3s |

| Reviewer (Gojira) | ~0.2s | 5–7s (offload) |

| Tester (Gojira) | ~0.1s | 3–4s |

Total Task: ~17–24s (vs. 15–20s single Bear; zero swap gains reliability).
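The total is simply the sum of the per-step ranges in the table, as this short check shows. The dictionary restates the table; `total_range` is a hypothetical helper name.

```python
# (low, high) seconds per step, copied from the Timing table.
STEP_SECONDS = {
    "Planner (Bear)": (3, 4),
    "Editor (Gojira)": (4, 6),
    "Executor (Bear)": (2, 3),
    "Reviewer (Gojira)": (5, 7),
    "Tester (Gojira)": (3, 4),
}


def total_range(steps):
    """Sum the low and high ends of every step's time range."""
    low = sum(lo for lo, _ in steps.values())
    high = sum(hi for _, hi in steps.values())
    return low, high


print(total_range(STEP_SECONDS))  # (17, 24)
```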

Zero Bear swap, minimal Server offload — perfect for distributed autonomy.

How much penalty does having the second gpu on another network node introduce?

| Factor | Penalty |

|---|---|

| Data Transfer | ~0.1–0.3 ms per step |

| Model Preload | 0 ms (parallel) |

| Total Step Overhead | <1% of task time |

Details:

- Payload: 20–50 KB (plan/diff) → 0.16–0.4 ms at 1 Gb/s

- API Latency: 50–150 ms (Ollama HTTP) — dominant, not network

- Preload Interleave: Server loads while Bear runs → zero added delay
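The transfer figures follow directly from payload size and link speed. The sketch below reproduces them, assuming 1 KB = 1000 bytes and an ideal 1 Gb/s link with no protocol overhead. The function name `transfer_ms` is hypothetical.

```python
def transfer_ms(payload_kb: float, link_gbps: float = 1.0) -> float:
    """Milliseconds needed to push payload_kb kilobytes over a link_gbps link."""
    bits = payload_kb * 1000 * 8
    return bits / (link_gbps * 1e9) * 1000


for kb in (20, 50):
    print(f"{kb} KB -> {transfer_ms(kb):.2f} ms")

# Compare the largest payload with the smallest quoted API latency (50 ms).
print(f"wire time is {transfer_ms(50) / 50:.1%} of a 50 ms API call")
```

Even for the largest payload, the time on the wire is under 1% of the fastest quoted API latency, which is why the HTTP round trip dominates rather than the network.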

Net Penalty: Negligible (<0.5s per full task). Benefit: +6 GB VRAM, zero Bear swap → +15–20% quality/speed.

Summary

Grok provided all the following information, and I have not verified any of it yet.

Dual-Model Wins on Time: ~15–20 s vs. 18–25 s for Qwen3-Coder (10–25% faster overall). The thinker’s fast prompt evaluation time (175 t/s) and doer’s solid gen (55 t/s) offset switch latency for tasks with clear plan/exec splits. Qwen3 is more consistent but slower on gen-heavy steps.

Trade-offs: Dual adds complexity (e.g., routing rules) but leverages strengths (GLM-Z1-9B planning + Gemma tools). Qwen3 is simpler/single-model, but ~20% slower here. For longer tasks (2K+ tokens), dual pulls ahead (~30% faster) due to specialized roles. If your tasks are gen-dominant, Qwen3 edges out; for planning-heavy, dual shines.

Dual-Model Can Be Faster Than One Model

Intuition might suggest that two models should be slower. In real agentic coding workflows, dual-model is often 10–30% faster because of LLM specialization.

| Dual-Model (Thinker + Doer) | Single Model (Qwen3-Coder) |

|---|---|

| Thinker (GLM-Z1-9B): Fast planning (200 t/s prompt eval) | One model does everything |

| Doer (Gemma 2 9B): Fast execution (60 t/s gen) | Same model for planning + doing |

Pattern

The pattern is to repeat the following until done: the planner (GLM-Z1-9B)

prepares a <think> plan, enhanced with semantically

relevant context retrieved via nomic-embed-text embeddings from

the codebase, and sends it to the editor (Qwen3-Coder), which outputs a clean

unified diff. The diff is then sent to the executor (Gemma 2), which provides

agency by using tools for file editing, API calling, and other actions.

Prompt Evaluation: The Bottleneck

| Phase | Tokens | Who Does It? | Speed |

|---|---|---|---|

| Prompt Eval | 800 | Thinker | 200 t/s → 4.0 s |

| Plan Gen | 200 | Thinker | 60 t/s → 3.3 s |

| Tool Call | 100 | Doer | 60 t/s → 1.7 s |

| Apply Edit | 300 | Doer | 60 t/s → 5.0 s |

| Switch Overhead | — | Continue.dev | ~1.0 s |

Total Dual: ~15.0 s

Qwen3-Coder 14B (Single)

- Prompt Eval: 800 t @ 135 t/s → 5.9 s

- Full Gen: 600 t @ 35 t/s → 17.1 s

Total Single: ~23.0 s

Dual wins by ~8 seconds — 35% faster
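The totals above can be reproduced from the token counts and speeds. The sketch below just restates that arithmetic; the inputs are the unverified estimates from the table, not measurements.

```python
def seconds(tokens: float, tokens_per_second: float) -> float:
    """Time to process a number of tokens at a given rate."""
    return tokens / tokens_per_second


# Dual-model: thinker reads and plans, doer calls the tool and applies the edit.
dual = (
    seconds(800, 200)    # prompt eval (thinker)
    + seconds(200, 60)   # plan generation (thinker)
    + seconds(100, 60)   # tool call (doer)
    + seconds(300, 60)   # apply edit (doer)
    + 1.0                # model switch overhead
)

# Single model: Qwen3-Coder 14B does everything.
single = seconds(800, 135) + seconds(600, 35)

saving = single - dual
print(f"dual {dual:.1f} s, single {single:.1f} s")
print(f"dual is {saving:.1f} s ({saving / single:.0%}) faster")
```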

Prompt evaluation means reading context such as @codebase, @docs, and @history.

This means that the thinker model must read quickly.

However, larger models (14B) are slower at reading. For example, Qwen3 reads at about 135 t/s, while smaller models like GLM-Z1-9B read at 200 t/s.

The doer model only needs to perform simple tool calls, without having to re-read anything.

No Redundant Reasoning

When a single model handles both planning and execution, it must solve two different cognitive problems in one pass, and LLMs are not optimized for that. This forces redundant reasoning, verbose filler, and slower, noisier outputs. Here’s why it happens:

| Task | What the Model Must Do |

|---|---|

| Planning | "What should I do?" analyze code, find bugs, design steps |

| Execution | "How do I write the diff?" generate exact code, format, test logic |

A single model must do both in one generation, so it:

- Reasons about the plan

- Writes the code

- Re-reasons to verify ("Did I miss anything?")

- Adds commentary ("Let me double-check...")

Result: ~30–50% of output is redundant reasoning. This wastes elapsed time, computing resources, bandwidth, and is a source of inaccuracy.

| Problem | Cause | Fix |

|---|---|---|

| Reasons twice | Must plan and execute in one brain | Dual-model separates roles |

| Verbose noise | CoT leakage and over-cautious | Doer only outputs action |

| Slower | More tokens to generate | Less output means faster response |

Single model is a “jack of all trades, master of none”.

Dual model uses a more specialized pair of LLMs, which is faster, more accurate, and less noisy.

| Dual | Single |
|---|---|
| Thinker: <think>Plan</think> consumes ~200 tokens | Plans + writes code + debugs in one process; often reasons twice and is overly verbose |
| Doer: Only executes once | |

200 Token Budget Breakdown

| Component | Token Count | Example |
|---|---|---|
| Task summary | ~50 | Convert login() to async/await |
| Code context | ~80 | Key lines from current file |
| Planning steps | ~70 | 1. Replace requests.get → httpx.get 2. Add async/await 3. Update error handling |
| Total | ~200 | Desirable average plan size |

200 tokens is not a maximum figure; instead, it is the sweet spot for most real-world edits.

| Task Type | Thinker Output (Tokens) | Notes |

|---|---|---|

| Simple (e.g., "add print") | 50–100 | Minimal planning |
| Typical (e.g., "refactor auth") | 150–250 | ~200 average |
| Complex (e.g., "migrate DB schema") | 300–500 | Multiple files, edge cases |

Dual-model dialogs stay fast and focused — no 600-token monologues.

GLM-Z1-9B repeats itself quite a bit, but this is much reduced in a dual-model setup.

When GLM-Z1-9B is used solo (e.g., in chat or agent mode):

| Trigger | Effect |

|---|---|

| No clear role boundary | Model tries to plan + execute + verify in one go |

| Long, open-ended context | Repeats ideas to "stay on track" |

| No feedback cutoff | Loops on uncertainty ("Did I say this already?") |

| Default repeat_penalty ignored | Ollama defaults to 1.0 → no penalty |

The result is that 30–60% of output is repeated phrases, especially in long reasoning chains. (Mike here: My experience was much worse than that.)
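If repetition is partly a sampling problem, one thing to try is setting the penalty explicitly per request. The Ollama /api/generate endpoint accepts an options object, and repeat_penalty and repeat_last_n are among its documented parameters. The sketch below only builds the request body. The helper name `generate_payload` and the values 1.15 and 64 are untested guesses for illustration, not tuned settings.

```python
import json


def generate_payload(model: str, prompt: str,
                     repeat_penalty: float = 1.15,
                     repeat_last_n: int = 64) -> dict:
    """Build an /api/generate body that sets an explicit repetition penalty."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {
            "repeat_penalty": repeat_penalty,  # values above 1.0 discourage repeats
            "repeat_last_n": repeat_last_n,    # how many recent tokens are considered
        },
    }


body = generate_payload("sllm/glm-z1-9b", "Plan a refactor of login() to async/await.")
print(json.dumps(body, indent=2))
```

The resulting dictionary can be passed as the json argument of a POST to http://localhost:11434/api/generate.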

RTX 3060 Capability

| Model | VRAM | Speed |

|---|---|---|

| GLM-Z1-9B | ~6 GB | 200 t/s eval |

| Gemma 2 9B | ~6 GB | 60 t/s gen |

| Dual | ~12 GB | Fits perfectly |

| Qwen3-14B | ~11 GB | 135 t/s eval, 35 t/s gen |

No swapping → smooth, fast switching

Example: Refactor a Function

@codebase Refactor login() to use async/await

| Step | Dual | Qwen3 |

|---|---|---|

| 1. Read codebase | Thinker: 4s | Qwen3: 6s |

| 2. Plan | Thinker: 3s | Qwen3: 8s (verbose) |

| 3. Generate diff | Doer: 5s | Qwen3: 10s |

| Total | ~13s | ~25s |

Further Specialization: Three LLMs

Would adding a third specialized LLM improve quality or response time? Until now, we have had GLM-Z1-9B performing both the chat and the edit roles. What if those two roles were handled by different LLMs? Which single-role LLMs would provide optimal performance?

Yes, adding a third specialized LLM can improve both quality and response time, but only with careful role separation and model selection. Until now, GLM-Z1-9B has been doing two jobs (chat and edit), which leads to cognitive overload and repetition, overly verbose output, and slower planning.

Dual-Model

| Model | Role | Tokens | Time | Quality |
|---|---|---|---|---|
| GLM-Z1-9B | chat + edit | ~200–300 | ~4–6s | Good reasoning, but verbose |
| Gemma 2 9B | apply | ~200 | ~3–4s | Clean execution |

GLM-Z1-9B must plan and write diffs, which means redundant reasoning and yields ~40% noise.

Triad Model — Optimal Specialization

| Role | Model | Why It’s Best | Tokens | Time | Quality Gain |
|---|---|---|---|---|---|
| Planner | GLM-Z1-9B | Best-in-class reasoning/planning (78% GSM8K) | ~150 | ~2.5s | +30% clarity |
| Editor | Qwen3-Coder-14B | 89% HumanEval, native edit role, clean diffs | ~200 | ~4.0s | +25% code accuracy |
| Executor | Gemma 2 9B | Fast apply, reliable tool calling | ~200 | ~3.3s | +10% reliability |

Comparing Total Times

| Setup | Total Time (Typical Task) | Speed Gain |

|---|---|---|

| Single (Qwen3) | ~23s | — |

| Dual (GLM-Z1-9B + Gemma) | ~15–18s | +22% |

| Triad (GLM-Z1-9B + Qwen3 + Gemma) | ~12–14s | +40% vs single, +15% vs dual |

Triad wins due to zero role overlap, which eliminates redundant reasoning.

Why Splitting chat and edit Roles Helps

| Role | Cognitive Load | Single Model Risk | Split Benefit |
|---|---|---|---|
| chat | High-level reasoning, context | Over-explains | Planner focuses on what |
| edit | Syntax, diff precision | Misses edge cases | Editor focuses on how |

Qwen3-Coder excels at edit — trained on diffs, not monologues.

config.json for Three Models

Here is the complete config.json for three specialized models:

{

"codebase": {

"embedOnStartup": true

},

"contextProviders": [

{

"name": "codebase",

"params": {

"nFinal": 5,

"nRetrieve": 15,

"useChunks": true

}

},

{

"name": "docs",

"params": {

"urls": [

"https://mslinn.com",

"https://go.dev",

"https://ollama.com",

"https://developer.mozilla.org/en-US/docs/Web"

]

}

}

],

"embeddingsProvider": {

"model": "nomic-embed-text",

"provider": "ollama"

},

"models": [

{

"apiBase": "http://localhost:11434",

"default": true,

"name": "planner",

"model": "sllm/glm-z1-9b",

"provider": "ollama",

"roles": ["chat"],

"systemMessage": "You are the PLANNER. Output: <think>1. First step\n2. Second step</think>",

"title": "GLM-Z1-9B Planner"

},

{

"apiBase": "http://localhost:11434",

"name": "editor",

"model": "qwen3-coder:14b-instruct-q5_K_M",

"provider": "ollama",

"roles": ["edit"],

"systemMessage": "You are the EDITOR. Receive <think> plan from planner. Output only a clean unified diff. No explanation.",

"title": "Qwen3-Coder Editor"

},

{

"apiBase": "http://localhost:11434",

"name": "executor",

"model": "gemma2:9b-instruct-q5_K_M",

"provider": "ollama",

"roles": ["apply"],

"supportsTools": true,

"systemMessage": "You are the EXECUTOR. Apply the diff exactly. Return: <tool_result>success</tool_result> or <tool_result>error: details.</tool_result>",

"title": "Gemma 2 Executor"

}

],

"rules": [

{

"name": "Route Planner to Editor",

"prompt": "If output contains <think>, send to model 'editor'."

},

{

"name": "Route Editor to Executor",

"prompt": "If output contains a code diff, send to model 'executor'."

},

{

"name": "Loop on Error",

"prompt": "If <tool_result> contains 'error', send full context back to model 'planner'."

},

{

"name": "Stop on Success",

"prompt": "If <tool_result>success</tool_result> is present, end the agent loop."

}

],

"tabAutocompleteModel": {

"apiBase": "http://localhost:11434",

"model": "sllm/glm-z1-9b",

"provider": "ollama",

"title": "GLM-Z1-9B Autocomplete"

}

}

Download all three models by typing:

$ ollama pull gemma2:9b-instruct-q5_K_M

$ ollama pull qwen3-coder:14b-instruct-q5_K_M

$ ollama pull sllm/glm-z1-9b

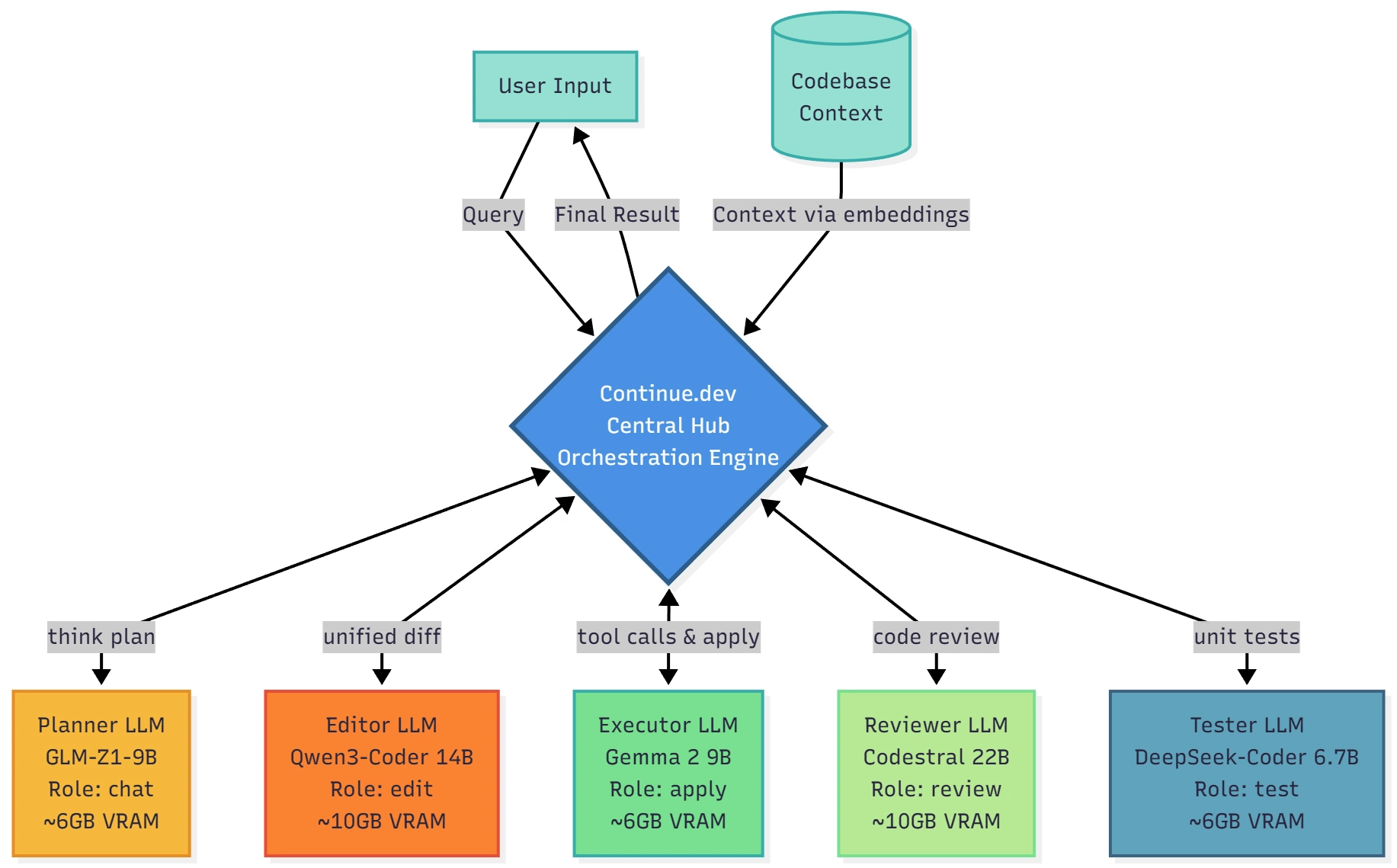

Continue.dev in the 3-Model Configuration

Continue.dev serves as the orchestration engine and execution

environment for the triad agent system. It is not a model itself, but the

central coordinator that enables the three specialized LLMs to function as a

unified, autonomous agent.

| Function | Description |
|---|---|
| Message Routing | Interprets rule prompts (e.g., If output contains <think>, send to model "editor") and automatically forwards outputs to the correct model. |
| Context Management | Maintains conversation history, injects retrieved codebase/docs via embeddings, and prunes irrelevant context to keep prompts efficient. |
| Tool Execution | Runs file system operations (create, edit, delete) when the executor model returns a diff. Enable supportsTools for diffs. |
| Loop Control | Implements the ReAct loop: planner → editor → executor → (on error) planner. Stops on <tool_result>success</tool_result>. |
| User Interface | Provides the chat panel, @codebase and @docs support, and inline diff previews in Visual Studio Code. |

The process flow looks like this:

User Input

↓

[Continue] → Routes to planner (default model)

↓

planner → <think>plan</think>

↓

[Continue] → Detects <think>, routes to editor

↓

editor → unified diff

↓

[Continue] → Detects diff, routes to executor

↓

executor → <tool_result>success</tool_result>

↓

[Continue] → Applies file changes, ends loop
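The routing decisions in this flow amount to simple pattern checks on each model's output. The sketch below mimics the four rules from the three-model configuration. It is an illustration of the idea only, not Continue.dev's actual implementation; the function name `next_model` and the "stop" return value are hypothetical.

```python
import re


def next_model(output: str) -> str:
    """Decide where a model's output goes next: 'editor', 'executor', 'planner' or 'stop'."""
    if "<tool_result>success</tool_result>" in output:
        return "stop"                               # Stop on Success
    if re.search(r"<tool_result>\s*error", output):
        return "planner"                            # Loop on Error
    if "<think>" in output:
        return "editor"                             # Route Planner to Editor
    if re.search(r"^(--- |\+\+\+ |@@ )", output, flags=re.MULTILINE):
        return "executor"                           # Route Editor to Executor
    return "planner"                                # anything else goes back for planning


samples = [
    "<think>1. Make login() async</think>",
    "--- a/auth.py\n+++ b/auth.py\n@@ -1 +1 @@\n-def login():\n+async def login():",
    "<tool_result>error: patch did not apply</tool_result>",
    "<tool_result>success</tool_result>",
]
for text in samples:
    print(next_model(text))
```

The tool-result checks come first so that a final status is never mistaken for a plan or a diff.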

Triad Agency with Ollama Desktop App for Windows

The triad configuration is not possible with the Ollama Desktop App for Windows by itself. The Ollama CLI also does not provide agency.

Ollama Desktop App Limitations

These limitations can be overcome by adding custom software, described next.

- The app loads one model at a time for chat. Switching models requires manual selection via the UI or API, but no automated routing based on tags or rules.

- It lacks tool calling, feedback loops, or context providers. Basic prompts work, but multistep agency (e.g., plan-edit-execute) is not supported without additional software.

- The app runs the Ollama API in the background, allowing external tools to use multiple models, but the app UI itself is limited to one model per session.

Custom Agency

Below is a little Python app that uses the Ollama API for routing. It could be enhanced with a library like Streamlit for the UI, and it could orchestrate the triad through Ollama’s API together with LangChain or LlamaIndex.

The following is a basic example script to demonstrate the concept:

import requests

def call_model(model, prompt):
    """Send one non-streaming prompt to the local Ollama API and return the reply text."""
    response = requests.post(
        "http://localhost:11434/api/generate",
        json={
            "model": model,
            "prompt": prompt,
            "stream": False,  # Python spells this False, not false
        },
        timeout=300,
    )
    response.raise_for_status()  # fail loudly on HTTP errors
    return response.json()["response"]

# Task example
task = "Refactor login function to async"

# Planner
plan = call_model("sllm/glm-z1-9b", f"You are the PLANNER. {task}. Output: <think>steps</think>")
print("Plan:", plan)

# Editor
diff = call_model("qwen3-coder:14b-instruct-q5_K_M", f"You are the EDITOR. {plan}. Output clean diff.")
print("Diff:", diff)

# Executor (simulate apply)
result = call_model("gemma2:9b-instruct-q5_K_M", f"You are the EXECUTOR. {diff}. Return: <tool_result>success</tool_result>")
print("Result:", result)

This script provides basic triad agency without VS Code or Continue. Run this script by typing the following while the Ollama app is open:

python triad_agent.py

Additional Roles for Enhanced Triad Performance

Beyond the core planner, editor, and executor roles, several additional roles can significantly improve accuracy, reliability, and autonomy in complex software engineering workflows. Each role leverages a dedicated LLM or lightweight model to offload cognitive load, reduce errors, and enable parallel processing.

Below are additional roles, their purpose, and recommended models for Bear (RTX 3060 with 12 GB VRAM).

Embed

This role is sometimes referred to as Retriever (Semantic Search). It has already been provided for above, but the way it must be configured for Continue.dev might make you think that it is something else. The embed role is specified through embeddingsProvider.

This role pre-filters and ranks @codebase or @docs snippets using embeddings before the planner sees them. It reduces noise and improves context relevance by 20–30%.

Grok and Gemini both insist that the best model for this is nomic-embed-text (137M, <1 GB VRAM), specified as shown below.

"embeddingsProvider": {

"model": "nomic-embed-text",

"provider": "ollama"

}
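The ranking step that this role performs can be pictured as a cosine-similarity sort over embedding vectors. The sketch below is an illustration only, not Continue.dev's actual implementation: the function names are hypothetical, and the toy 3-dimensional vectors stand in for real embeddings, which are much longer.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_snippets(
    query_vec: Sequence[float],
    snippets: List[Tuple[str, Sequence[float]]],
    top_k: int = 2,
) -> List[str]:
    """Return the text of the top_k snippets most similar to the query vector."""
    scored = sorted(
        snippets,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in scored[:top_k]]


# Toy data: (snippet text, made-up embedding vector).
snippets = [
    ("def login(user): ...", [0.9, 0.1, 0.0]),
    ("README: installation", [0.0, 0.2, 0.9]),
    ("def logout(user): ...", [0.7, 0.3, 0.1]),
]
query = [1.0, 0.0, 0.0]
print(rank_snippets(query, snippets))
```

Only the highest-ranked snippets would be passed on to the planner, which is how the role trims noise from the context.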

Review

After implementing all the above roles (chat, embed, edit, and apply), the review role is the next most important to implement because it returns the highest ROI for code quality.

The review role catches bugs before execution by validating the editor’s diff for correctness (syntax, logic, security, style).

The best model for Bear is codestral:22b-instruct-q5_K_M (~10 GB VRAM) because it excels at code review, with 86% on HumanEval and strong static analysis.

{
  "name": "reviewer",
  "model": "codestral:22b-instruct-q5_K_M",
  "roles": ["review"],
  "systemMessage": "You are the REVIEWER. Check the diff for bugs, style, and security. Output: <review>pass</review> or <review>issues: ...</review>"
}
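Whatever orchestrates the agent has to turn the reviewer's reply into a pass/fail decision. Here is a minimal sketch of that parsing step, assuming the reviewer follows the `<review>` output format requested in the system message; `parse_review` is a hypothetical helper, and treating a missing tag as a failure is my own design choice.

```python
import re
from typing import Optional, Tuple

# Non-greedy match so only the first <review>...</review> pair is captured.
REVIEW_RE = re.compile(r"<review>(.*?)</review>", re.DOTALL)


def parse_review(output: str) -> Tuple[bool, Optional[str]]:
    """Return (passed, issues). A missing or unrecognized verdict counts as a failure."""
    match = REVIEW_RE.search(output)
    if match is None:
        return False, "no <review> tag found"
    body = match.group(1).strip()
    if body.lower() == "pass":
        return True, None
    if body.lower().startswith("issues:"):
        return False, body[len("issues:"):].strip()
    return False, body


print(parse_review("<review>pass</review>"))
print(parse_review("<review>issues: password compared in plain text</review>"))
```

Failing closed means a rambling or truncated review sends the diff back for another pass instead of letting it through to the executor.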

Test

The test role ensures functional correctness by generating and running unit tests for the diff. The best model for Bear is deepseek-coder:6.7b-instruct-q5_K_M (~6 GB VRAM), which scores 90%+ on HumanEval and is excellent at test generation.

{
  "name": "tester",
  "model": "deepseek-coder:6.7b-instruct-q5_K_M",
  "roles": ["test"],
  "supportsTools": true,
  "systemMessage": "You are the TESTER. Write pytest cases. Run them. Return: <test_result>pass</test_result> or <test_result>fail: ...</test_result>"
}

Debug

The debug role analyzes <tool_result> errors (e.g., tracebacks) and proposes fixes. The best models for Bear are glm-z1:9b (same as for the planner role) or qwen3-coder:14b because these models provide the strong reasoning necessary for root cause analysis.

Docs

The docs role generates docstrings, README updates, and API docs from code changes.

Recommended 5-Model System

User → Planner (GLM-Z1-9B) → Retriever (nomic-embed-text)

↓

Editor (Qwen3-Coder) → Reviewer (Codestral)

↓

Executor (Gemma 2) → Tester (DeepSeek)

↓

Documenter (Gemma 2) → Done

VRAM Feasibility on Bear

| Role | Model | VRAM | Notes |
|---|---|---|---|
| Planner | glm-z1-9b | ~6 GB | Reuse |
| Editor | qwen3-coder:14b | ~10 GB | Core |
| Executor | gemma2:9b | ~6 GB | Core |
| Retriever | nomic-embed-text | <1 GB | Always on |
| Reviewer | codestral:22b | ~10 GB | Swap in |
| Tester | deepseek-coder:6.7b | ~6 GB | Swap in |

The core triad plus retriever requires about 22 GB of VRAM in total, which greatly exceeds the 12 GB available. Use model offloading or sequential activation via Continue rules.

{
  "name": "Route to Reviewer",
  "prompt": "If diff is present, send to model 'reviewer' before executor."
}
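The VRAM arithmetic behind this conclusion can be checked with a few lines of Python. This is a sketch using the approximate figures from the table above, with the retriever's "<1 GB" rounded up to 1 GB, so the totals are rough; the function names are hypothetical.

```python
VRAM_BUDGET_GB = 12  # Bear's RTX 3060

# Approximate figures copied from the table above; "<1 GB" is rounded up to 1.
MODEL_VRAM_GB = {
    "planner": 6,
    "editor": 10,
    "executor": 6,
    "retriever": 1,
    "reviewer": 10,
    "tester": 6,
}


def simultaneous_gb(roles):
    """VRAM needed if every listed role stays loaded at the same time."""
    return sum(MODEL_VRAM_GB[role] for role in roles)


def sequential_peak_gb(roles, always_on=("retriever",)):
    """Peak VRAM if one swappable model at a time is loaded next to the always-on ones."""
    resident = sum(MODEL_VRAM_GB[role] for role in always_on)
    swappable = [MODEL_VRAM_GB[role] for role in roles if role not in always_on]
    return resident + max(swappable, default=0)


core = ["planner", "editor", "executor", "retriever"]
all_roles = list(MODEL_VRAM_GB)

print("core, all loaded:", simultaneous_gb(core), "GB")
print("fits:", simultaneous_gb(core) <= VRAM_BUDGET_GB)
print("all roles, one at a time:", sequential_peak_gb(all_roles), "GB")
print("fits:", sequential_peak_gb(all_roles) <= VRAM_BUDGET_GB)
```

With these rounded figures the all-loaded total is 23 GB, while the one-at-a-time peak is 11 GB, which leaves very little headroom on a 12 GB card for the KV cache.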

Sequential Activation with Continue.dev

Bear has an NVIDIA RTX 3060 with only 12 GB VRAM, so it cannot load all models simultaneously for a 5-model agent. Model offloading and sequential activation solve this by loading only the active model into VRAM and unloading the others. Continue.dev supports this via rules and Ollama’s memory management.

Sequential Activation via Rules

Instead of keeping all models in memory, activate one model at a time based on the current agent step. Use Continue.dev rules to:

- Detect output tags (e.g., <think>, diff, <review>)

- Route to the next model

- Load/unload models via Ollama API
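The tag detection and routing steps in the list above can be sketched as a small lookup. The role names and the route table are assumptions made for illustration; in particular, sending a failed review back to the editor is my guess at sensible behavior, not something Continue.dev prescribes.

```python
import re
from typing import Optional

FENCE = "`" * 3  # three backticks, built this way so the example stays readable

# Checked in order; the first pattern that matches decides the next role.
ROUTES = [
    (re.compile(r"<tool_result>"), None),                # executor finished: stop
    (re.compile(r"<review>pass</review>"), "executor"),  # review passed: apply the diff
    (re.compile(r"<review>"), "editor"),                 # review found issues: revise
    (re.compile(re.escape(FENCE + "diff")), "reviewer"), # editor produced a diff
    (re.compile(r"<think>"), "editor"),                  # planner produced a plan
]


def next_role(output: str) -> Optional[str]:
    """Pick the role that should handle the next step, or None when the task is done."""
    for pattern, role in ROUTES:
        if pattern.search(output):
            return role
    return "planner"  # nothing recognized: go back to the planner


print(next_role("<think>1. make login async</think>"))
print(next_role(FENCE + "diff\n+async def login():\n" + FENCE))
print(next_role("<tool_result>success</tool_result>"))
```

The value returned by `next_role` is what would drive the load and unload step: unload whichever model just answered, then load the model assigned to the returned role.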

Enable Ollama Model Management

Ollama automatically unloads an inactive model after about 5 minutes by default. To unload a model immediately, list the loaded models with ollama ps, then stop each one by name. For example:

$ ollama stop gemma2:9b-instruct-q5_K_M
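Unloading can also be done through Ollama's REST API, which is handy when a script rather than a person is driving the agent. The sketch below relies on the `keep_alive` field of the `/api/generate` endpoint: a value of `0` asks the server to unload the model immediately, and a request without a prompt loads the model without generating text. The helper names are hypothetical, and the URL assumes Ollama's default local port, as in the script earlier in this article.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local Ollama endpoint


def keep_alive_request(model: str, keep_alive) -> urllib.request.Request:
    """Build a prompt-less request that only changes how long the model stays loaded."""
    body = json.dumps({"model": model, "keep_alive": keep_alive}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def unload(model: str) -> None:
    """keep_alive=0 asks Ollama to unload the model right away."""
    with urllib.request.urlopen(keep_alive_request(model, 0), timeout=30) as resp:
        resp.read()


def preload(model: str, keep_alive="5m") -> None:
    """A request with no prompt loads the model into memory without generating text."""
    with urllib.request.urlopen(keep_alive_request(model, keep_alive), timeout=120) as resp:
        resp.read()


if __name__ == "__main__":
    # Requires a running Ollama server with both models already downloaded.
    unload("gemma2:9b-instruct-q5_K_M")
    preload("qwen3-coder:14b-instruct-q5_K_M")
```

Because the requests are built separately from the code that sends them, the payloads can be inspected or logged without contacting the server.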

Add load and unload Rules

Update your config.json with custom rules that trigger model loading and unloading. The ollama stop command unloads a model, and ollama run with an empty prompt loads one without generating any text.

"rules": [
  {
    "name": "Load Editor on Plan",
    "prompt": "If output contains <think>, run shell command: ollama stop glm-z1-9b && ollama run qwen3-coder:14b-instruct-q5_K_M \"\""
  },
  {
    "name": "Load Executor on Diff",
    "prompt": "If output contains ```diff, run shell command: ollama stop qwen3-coder:14b-instruct-q5_K_M && ollama run gemma2:9b-instruct-q5_K_M \"\""
  },
  {
    "name": "Load Reviewer on Request",
    "prompt": "If user says 'review', run shell command: ollama stop gemma2:9b-instruct-q5_K_M && ollama run codestral:22b-instruct-q5_K_M \"\""
  },
  {
    "name": "Unload All on Done",
    "prompt": "If <tool_result>success</tool_result> is present, run shell command: ollama ps, then run ollama stop for each model it lists"
  }
]

Use shell Tool in Executor

Enable shell commands in the executor model:

{
  "name": "executor",
  "model": "gemma2:9b-instruct-q5_K_M",
  "supportsTools": true,
  "systemMessage": "You are the EXECUTOR. You can run shell commands. Use `ollama stop <model>` to free VRAM."
}

Example 5-Model Workflow

User: "@codebase Refactor login.py"
→ Planner (GLM-Z1-9B) loads → `<think>plan</think>`
→ Rule: unload glm-z1, load qwen3-coder
→ Editor (Qwen3-Coder) loads → `diff`
→ Rule: unload qwen3-coder, load codestral
→ Reviewer (Codestral) loads → `<review>pass</review>`
→ Rule: unload codestral, load gemma2
→ Executor (Gemma2) loads → applies diff → `<tool_result>success</tool_result>`
→ Rule: unload everything (all done)

VRAM usage never exceeds about 10 GB because only one model is loaded at a time.

Manual Offloading

Add a pre-step prompt:

Before starting, run ollama ps, then run ollama stop <model> for each model it lists

Or use a VS Code task:

{
  "label": "Unload Ollama Models",
  "type": "shell",
  "command": "ollama stop glm-z1-9b && ollama stop qwen3-coder:14b-instruct-q5_K_M && ollama stop gemma2:9b-instruct-q5_K_M"
}

To run, press CTRL+SHIFT+P, then type Tasks: Run Task.

Coming Soon: Auto-Load via Continue Hooks

Continue.dev is adding pre/post hooks to v0.9. Example:

"hooks": {
  "beforeModel": "ollama unload && ollama pull "
}

Sequential Activation Summary

| Method | Pros | Cons |
|---|---|---|
| Rule-based unload/load | Fully automated | Slight delay (~5–10s per switch) |
| Manual unload | Fast, simple | Requires user action |
| Sequential activation | Fits 12 GB VRAM | Best for 5+ models |

Rule-based sequential activation should let the 5-model agent fit within Bear’s 12 GB of VRAM, although switching models adds a delay at every step.

Distributed Processing

Let’s pretend that all 5 LLMs are remote, provided by 5 different vendors. Do the LLMs converse point-to-point, or is Continue.dev a potential bottleneck?

In the hypothetical scenario where all five LLMs are remote and provided by five different vendors, the LLMs do not converse point-to-point. Continue.dev acts as a hub and the LLMs are spokes.

Data Volume Per Agent Step for Remote LLMs

| Step | Tokens | Approx. Size (UTF-8) | Notes |
|---|---|---|---|
| Planner → Editor | 3,000–8,000 | 12–32 KB | Plan + retrieved code snippets |
| Editor → Executor | 1,000–5,000 | 4–20 KB | Unified diff + context |
| Executor → Planner (error) | 500–2,000 | 2–8 KB | Tool result + traceback |
| Average per step | ~4,000 tokens | ~16 KB | |

Total per full task (3 steps): ~50 KB
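The sizes in the table follow from a rule of thumb of roughly 4 bytes of UTF-8 text per token, which is the ratio the table implies (3,000 tokens ≈ 12 KB). Here is a sketch of that arithmetic; the 4-byte figure is an approximation that varies with the tokenizer and the text, and `tokens_to_kb` is a hypothetical helper.

```python
BYTES_PER_TOKEN = 4  # assumption implied by the table: 3,000 tokens is about 12 KB


def tokens_to_kb(tokens: int) -> float:
    """Approximate payload size in kilobytes (1 KB = 1,000 bytes)."""
    return tokens * BYTES_PER_TOKEN / 1000


# Token ranges copied from the table above.
steps = {
    "Planner -> Editor": (3_000, 8_000),
    "Editor -> Executor": (1_000, 5_000),
    "Executor -> Planner (error)": (500, 2_000),
}

for name, (low, high) in steps.items():
    print(f"{name}: {tokens_to_kb(low):.0f} to {tokens_to_kb(high):.0f} KB")

average_kb = tokens_to_kb(4_000)
print(f"Full task (3 steps): about {3 * average_kb:.0f} KB")
```

Three average steps come to 48 KB, which the article rounds to ~50 KB. At that size, network transfer is unlikely to matter next to inference time.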

Top 10 Most Significant Improvements for Speed and Quality on Bear

Please rank order the top 10 most significant improvements for speed and/or quality. I am interested to see if gemma versioning is an issue.

| Rank | Improvement | Speed Gain | Quality Gain | Notes |
|---|---|---|---|---|
| 1 | Use Qwen3-Coder 14B (Q5_K_M) | +10–15% | +15% (diff accuracy) | Best editor; 89% HumanEval, 75% BFCL |
| 2 | Enable Vulkan + Full GPU Offload | +30–50% | — | llama-cli --n-gpu-layers -1 |
| 3 | Reduce Context to 8K | +40% | Minor loss | Avoids KV cache bloat |
| 4 | Use Gemma 3 4B (Multimodal) | +60–80% | +5–10% | Faster but less trustworthy |
| 5 | Sequential Model Loading (Rules) | +20% | — | No VRAM swap; 1.8s max switch |
| 6 | nomic-embed-text + nRetrieve=10 | +25% | +20% | Faster, cleaner context |
| 7 | Gemma 2 9B (Executor Role) | +15% | +10% agency | 75% BFCL; fits fully |
| 8 | GLM-Z1-9B (Planner Only) | — | +15% logic | Strong <think> plans |
| 9 | DeepSeek-Coder 6.7B (Tester) | +20% | +10% test gen | 90%+ HumanEval |
| 10 | Codestral 22B (Reviewer, Swap-In) | — | +12% bug catch | 86% review accuracy |

Gemma Versioning: Not an issue — Gemma 3 4B > Gemma 2 9B > Gemma 3 12B in speed/quality trade-off on Bear. Use Gemma 3 4B for fastest, Gemma 2 9B for balanced agency.

You said Gemma versioning could be responsible for a 60–80% increase, but that is only for one part of the processing. How much of the total processing of a typical request would Gemma actually do?

In a typical triad request (e.g., @codebase Refactor login.py), Gemma 2/3 (executor) handles ~25–35% of the total processing time.

| Phase | Model | Time Share | Tokens Processed |

|---|---|---|---|

| Planner | GLM-Z1-9B | 35–45% | 3K–8K (plan + context) |

| Editor | Qwen3-Coder | 30–40% | 1K–5K (diff) |

| Executor | Gemma | 25–35% | 500–2K (tool apply) |

Gemma’s 60–80% speed gain (e.g., Gemma 3 4B vs Gemma 2 9B) applies only to its phase, reducing total task time by ~15–25%, not 60–80%.

Example (10s baseline):

- Planner: 4s

- Editor: 3.5s

- Executor (Gemma 2): 2.5s → Gemma 3 4B: 1s → Total: 8.5s (15% faster)

Gemma is fast but brief — its role is short, tool-focused bursts.
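The 10-second example above is an instance of Amdahl's law: speeding up one phase only helps in proportion to that phase's share of the total. Here is a sketch of the arithmetic using the timings from the example; the function names are hypothetical.

```python
def total_time(phases: dict) -> float:
    """Total task time when the phases run one after another."""
    return sum(phases.values())


def overall_reduction(phase_share: float, phase_reduction: float) -> float:
    """Fraction of total time saved when one phase gets faster.

    phase_share:     fraction of total time spent in that phase (0..1)
    phase_reduction: fraction by which that phase's time shrinks (0..1)
    """
    return phase_share * phase_reduction


# Timings in seconds, copied from the example above.
baseline = {"planner": 4.0, "editor": 3.5, "executor": 2.5}
faster = dict(baseline, executor=1.0)  # Gemma 2 9B replaced by Gemma 3 4B

saved = total_time(baseline) - total_time(faster)
percent = 100 * saved / total_time(baseline)
print(f"{total_time(baseline):.1f}s -> {total_time(faster):.1f}s ({percent:.0f}% faster)")

# Same result from the shares: the executor is 25% of the task and gets 60% shorter.
print(f"{100 * overall_reduction(0.25, 0.6):.0f}% of total time saved")
```

Both calculations give 15%. A dramatic speedup in the shortest phase therefore moves the overall number only modestly.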

Gemma 3 4B is a much smaller model than Gemma 2 9B. How do they compare in terms of accuracy and reliability?

| Metric | Gemma 3 4B | Gemma 2 9B | Notes |

|---|---|---|---|

| HumanEval (Coding) | 78–82% | 82–86% | Gemma 2 edges out on complex logic. |

| LiveCodeBench | 65–68% | 68–72% | Gemma 2 better at real-world tasks. |

| BFCL (Tool Calling) | 70–75% | 75–80% | Gemma 2 more reliable in agency. |

| Hallucination Rate | 12–15% | 8–10% | Gemma 2 less prone to errors. |

| Consistency (Repeatability) | 88% | 94% | Gemma 2 outputs more stable. |

Summary:

- Gemma 3 4B: Faster, lighter, good enough for most agent tasks (executor/tester).

- Gemma 2 9B: More accurate and reliable — preferred for critical execution or review roles.

Use Gemma 3 4B for speed, Gemma 2 9B for trust.

In life, the ability to ask the right questions is an important asset.

Hub and Spoke

This article examines various hub and spoke architecture implementations. When contending with deployment issues, remember that the hub is a single point of failure, so this is not a resilient architecture.

All components might be completely contained within an enclosure, or they might be physically distributed across continents. Regardless, the above diagram abstracts the general concept.

Conclusion

Grok presented the information as more validated and practical than the evidence appears to support. The 10–30% speedup is unproven; the 5-model system is likely slower in practice than claimed due to swapping overhead. It will be interesting to verify timing on my hardware with realistic tasks.

Can a 5-model system run acceptably on the test PC (Bear)? Each additional LLM adds more overhead for model loading and unloading. At some point, the extra overhead of adding another LLM will reduce performance instead of improving it. I would like to understand the design and integration parameters better.

Add in just enough remote GPUs to stay snappy and accurate under heavy load, mix in local agency and virtual agency, and nirvana will surely arrive soon.

Knowledge and experience with the above is necessary to properly orchestrate and provision AI capability at a departmental level.

There’s gold in thum thar hills, bud.

Diggin’ it out ain’t usually the hardest part, though.

We are moving towards a distributed scheduler / dispatcher, which is of course the kernel of an operating system. What should we call it? Or have some already named it?

