Published 2024-01-23.

Last modified 2024-04-21.


Whisper, from OpenAI, is an F/OSS Automatic Speech Recognition (ASR) system that transcribes speech to text. Compared to Siri, Alexa, and Google Assistant, Whisper transcribes fast, mumbled, or jargon-filled voice recordings very accurately.

Whisper is not itself an LLM, but it can serve as a data source for one: the text it transcribes from speech can be fed into an LLM, or into a text-to-image model that generates an image from that text.

Online Access

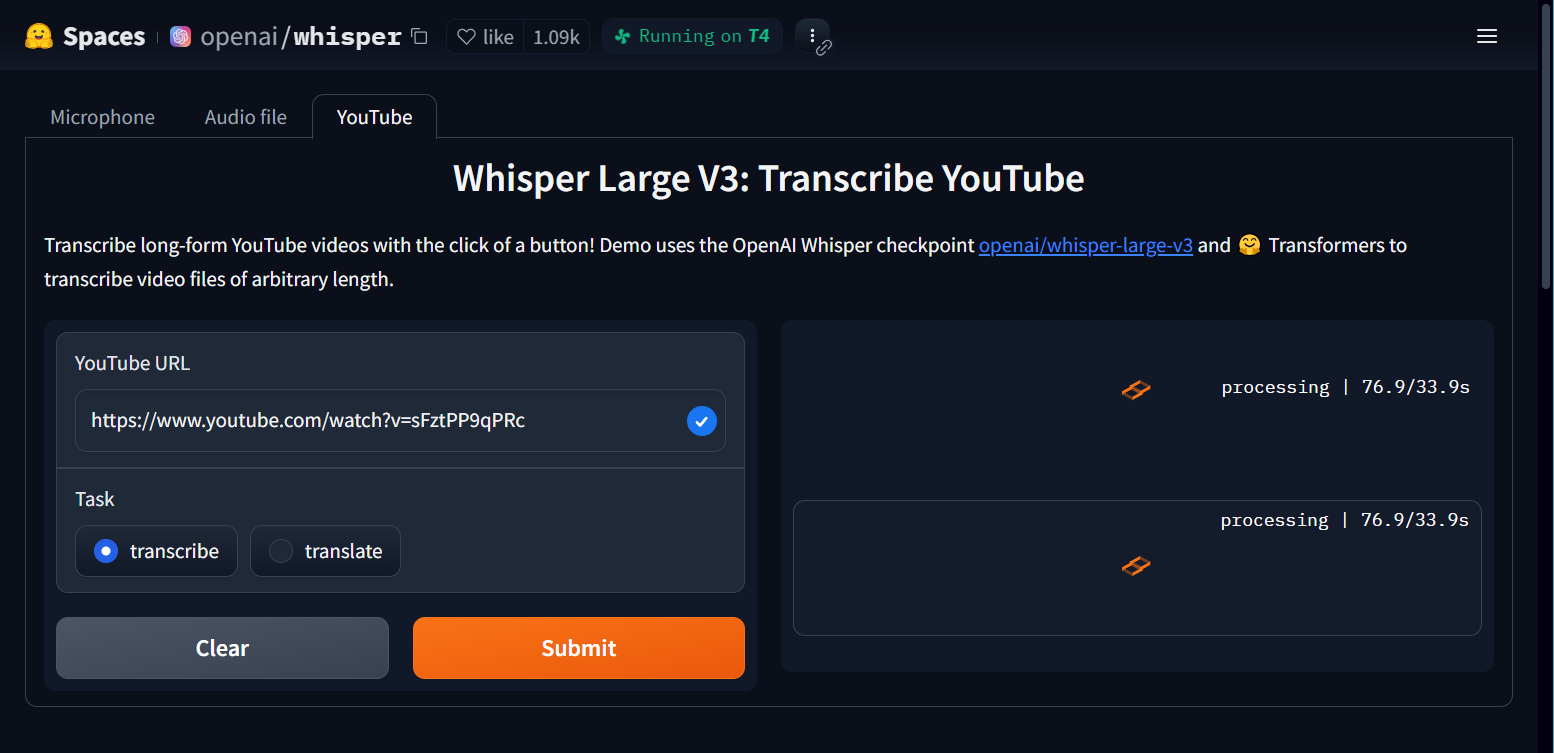

You can use Whisper online at no charge, without limitation, on the openai/whisper space at Hugging Face.

The Whisper webapp transcribes audio or video files of any length.

Data sources include the microphone of your computer or mobile device, audio files, and YouTube videos.

Select the tab at the top of the webapp that corresponds to the type of data source that you would like to use.

BTW, you can upload a video into the Audio file tab, and it will work just fine.

The Python source code for the webapp is also provided for free.

Running Locally

You need Python on your computer to install Whisper locally. Whisper also requires the ffmpeg command-line program, which it uses to read audio and video files.

This process installs the whisper command and a Python library.

Install the current version like this:

```
$ pip install -U git+https://github.com/openai/whisper.git
Collecting git+https://github.com/openai/whisper.git
  Cloning https://github.com/openai/whisper.git to /tmp/pip-req-build-srjtwic9
  Running command git clone --filter=blob:none --quiet https://github.com/openai/whisper.git /tmp/pip-req-build-srjtwic9
  Resolved https://github.com/openai/whisper.git to commit ba3f3cd54b0e5b8ce1ab3de13e32122d0d5f98ab
  Installing build dependencies ... done
  Getting requirements to build wheel ... done
  Preparing metadata (pyproject.toml) ... done
Requirement already satisfied: numba in /home/mslinn/venv/default/lib/python3.11/site-packages (from openai-whisper==20231117) (0.58.1)
Requirement already satisfied: numpy in /home/mslinn/venv/default/lib/python3.11/site-packages (from openai-whisper==20231117) (1.26.3)
Requirement already satisfied: torch in /home/mslinn/venv/default/lib/python3.11/site-packages (from openai-whisper==20231117) (2.1.2)
Requirement already satisfied: tqdm in /home/mslinn/venv/default/lib/python3.11/site-packages (from openai-whisper==20231117) (4.66.1)
Requirement already satisfied: more-itertools in /home/mslinn/venv/default/lib/python3.11/site-packages (from openai-whisper==20231117) (10.2.0)
Requirement already satisfied: tiktoken in /home/mslinn/venv/default/lib/python3.11/site-packages (from openai-whisper==20231117) (0.5.2)
Requirement already satisfied: triton<3,>=2.0.0 in /home/mslinn/venv/default/lib/python3.11/site-packages (from openai-whisper==20231117) (2.1.0)
Requirement already satisfied: filelock in /home/mslinn/venv/default/lib/python3.11/site-packages (from triton<3,>=2.0.0->openai-whisper==20231117) (3.13.1)
Requirement already satisfied: llvmlite<0.42,>=0.41.0dev0 in /home/mslinn/venv/default/lib/python3.11/site-packages (from numba->openai-whisper==20231117) (0.41.1)
Requirement already satisfied: regex>=2022.1.18 in /home/mslinn/venv/default/lib/python3.11/site-packages (from tiktoken->openai-whisper==20231117) (2023.12.25)
Requirement already satisfied: requests>=2.26.0 in /home/mslinn/venv/default/lib/python3.11/site-packages (from tiktoken->openai-whisper==20231117) (2.31.0)
Requirement already satisfied: typing-extensions in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (4.9.0)
Requirement already satisfied: sympy in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (1.12)
Requirement already satisfied: networkx in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (3.2.1)
Requirement already satisfied: jinja2 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (3.1.3)
Requirement already satisfied: fsspec in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (2023.12.2)
Requirement already satisfied: nvidia-cuda-nvrtc-cu12==12.1.105 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (12.1.105)
Requirement already satisfied: nvidia-cuda-runtime-cu12==12.1.105 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (12.1.105)
Requirement already satisfied: nvidia-cuda-cupti-cu12==12.1.105 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (12.1.105)
Requirement already satisfied: nvidia-cudnn-cu12==8.9.2.26 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (8.9.2.26)
Requirement already satisfied: nvidia-cublas-cu12==12.1.3.1 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (12.1.3.1)
Requirement already satisfied: nvidia-cufft-cu12==11.0.2.54 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (11.0.2.54)
Requirement already satisfied: nvidia-curand-cu12==10.3.2.106 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (10.3.2.106)
Requirement already satisfied: nvidia-cusolver-cu12==11.4.5.107 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (11.4.5.107)
Requirement already satisfied: nvidia-cusparse-cu12==12.1.0.106 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (12.1.0.106)
Requirement already satisfied: nvidia-nccl-cu12==2.18.1 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (2.18.1)
Requirement already satisfied: nvidia-nvtx-cu12==12.1.105 in /home/mslinn/venv/default/lib/python3.11/site-packages (from torch->openai-whisper==20231117) (12.1.105)
Requirement already satisfied: nvidia-nvjitlink-cu12 in /home/mslinn/venv/default/lib/python3.11/site-packages (from nvidia-cusolver-cu12==11.4.5.107->torch->openai-whisper==20231117) (12.3.101)
Requirement already satisfied: charset-normalizer<4,>=2 in /home/mslinn/venv/default/lib/python3.11/site-packages (from requests>=2.26.0->tiktoken->openai-whisper==20231117) (3.3.2)
Requirement already satisfied: idna<4,>=2.5 in /home/mslinn/venv/default/lib/python3.11/site-packages (from requests>=2.26.0->tiktoken->openai-whisper==20231117) (3.6)
Requirement already satisfied: urllib3<3,>=1.21.1 in /home/mslinn/venv/default/lib/python3.11/site-packages (from requests>=2.26.0->tiktoken->openai-whisper==20231117) (2.1.0)
Requirement already satisfied: certifi>=2017.4.17 in /home/mslinn/venv/default/lib/python3.11/site-packages (from requests>=2.26.0->tiktoken->openai-whisper==20231117) (2023.11.17)
Requirement already satisfied: MarkupSafe>=2.0 in /home/mslinn/venv/default/lib/python3.11/site-packages (from jinja2->torch->openai-whisper==20231117) (2.1.4)
Requirement already satisfied: mpmath>=0.19 in /home/mslinn/venv/default/lib/python3.11/site-packages (from sympy->torch->openai-whisper==20231117) (1.3.0)
```
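Before running Whisper, it can help to confirm that the installation worked. The following is a small sketch (not part of Whisper) that checks whether the whisper Python package can be found, and whether the whisper and ffmpeg commands are on your PATH. The function name whisper_prerequisites is my own, hypothetical choice.

```python
#!/usr/bin/env python
"""Check that the prerequisites for running Whisper locally are present."""

import importlib.util
import shutil


def whisper_prerequisites() -> dict[str, bool]:
    """Report whether the whisper package, the whisper command and ffmpeg are available."""
    return {
        # True if `import whisper` would find an installed package
        "whisper_module": importlib.util.find_spec("whisper") is not None,
        # True if the `whisper` command installed by pip is on PATH
        "whisper_command": shutil.which("whisper") is not None,
        # Whisper runs ffmpeg to decode audio and video files
        "ffmpeg": shutil.which("ffmpeg") is not None,
    }


if __name__ == "__main__":
    for name, ok in whisper_prerequisites().items():
        print(f"{name:16} {'found' if ok else 'MISSING'}")
```

If any line reports MISSING, revisit the installation step before going further.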

Whisper Command

Here is the help information for the whisper command:

```
$ whisper -h
usage: whisper [-h] [--model MODEL] [--model_dir MODEL_DIR] [--device DEVICE]
               [--output_dir OUTPUT_DIR] [--output_format {txt,vtt,srt,tsv,json,all}]
               [--verbose VERBOSE] [--task {transcribe,translate}]
               [--language {af,am,ar,as,az,ba,be,bg,bn,bo,br,bs,ca,cs,cy,da,de,el,en,es,et,eu,fa,fi,fo,fr,gl,gu,ha,haw,he,hi,hr,ht,hu,hy,id,is,it,ja,jw,ka,kk,km,kn,ko,la,lb,ln,lo,lt,lv,mg,mi,mk,ml,mn,mr,ms,mt,my,ne,nl,nn,no,oc,pa,pl,ps,pt,ro,ru,sa,sd,si,sk,sl,sn,so,sq,sr,su,sv,sw,ta,te,tg,th,tk,tl,tr,tt,uk,ur,uz,vi,yi,yo,yue,zh,Afrikaans,Albanian,Amharic,Arabic,Armenian,Assamese,Azerbaijani,Bashkir,Basque,Belarusian,Bengali,Bosnian,Breton,Bulgarian,Burmese,Cantonese,Castilian,Catalan,Chinese,Croatian,Czech,Danish,Dutch,English,Estonian,Faroese,Finnish,Flemish,French,Galician,Georgian,German,Greek,Gujarati,Haitian,Haitian Creole,Hausa,Hawaiian,Hebrew,Hindi,Hungarian,Icelandic,Indonesian,Italian,Japanese,Javanese,Kannada,Kazakh,Khmer,Korean,Lao,Latin,Latvian,Letzeburgesch,Lingala,Lithuanian,Luxembourgish,Macedonian,Malagasy,Malay,Malayalam,Maltese,Mandarin,Maori,Marathi,Moldavian,Moldovan,Mongolian,Myanmar,Nepali,Norwegian,Nynorsk,Occitan,Panjabi,Pashto,Persian,Polish,Portuguese,Punjabi,Pushto,Romanian,Russian,Sanskrit,Serbian,Shona,Sindhi,Sinhala,Sinhalese,Slovak,Slovenian,Somali,Spanish,Sundanese,Swahili,Swedish,Tagalog,Tajik,Tamil,Tatar,Telugu,Thai,Tibetan,Turkish,Turkmen,Ukrainian,Urdu,Uzbek,Valencian,Vietnamese,Welsh,Yiddish,Yoruba}]
               [--temperature TEMPERATURE] [--best_of BEST_OF] [--beam_size BEAM_SIZE]
               [--patience PATIENCE] [--length_penalty LENGTH_PENALTY]
               [--suppress_tokens SUPPRESS_TOKENS] [--initial_prompt INITIAL_PROMPT]
               [--condition_on_previous_text CONDITION_ON_PREVIOUS_TEXT] [--fp16 FP16]
               [--temperature_increment_on_fallback TEMPERATURE_INCREMENT_ON_FALLBACK]
               [--compression_ratio_threshold COMPRESSION_RATIO_THRESHOLD]
               [--logprob_threshold LOGPROB_THRESHOLD]
               [--no_speech_threshold NO_SPEECH_THRESHOLD]
               [--word_timestamps WORD_TIMESTAMPS]
               [--prepend_punctuations PREPEND_PUNCTUATIONS]
               [--append_punctuations APPEND_PUNCTUATIONS]
               [--highlight_words HIGHLIGHT_WORDS] [--max_line_width MAX_LINE_WIDTH]
               [--max_line_count MAX_LINE_COUNT]
               [--max_words_per_line MAX_WORDS_PER_LINE] [--threads THREADS]
               audio [audio ...]

positional arguments:
  audio                 audio file(s) to transcribe

options:
  -h, --help            show this help message and exit
  --model MODEL         name of the Whisper model to use (default: small)
  --model_dir MODEL_DIR
                        the path to save model files; uses ~/.cache/whisper by default (default: None)
  --device DEVICE       device to use for PyTorch inference (default: cuda)
  --output_dir OUTPUT_DIR, -o OUTPUT_DIR
                        directory to save the outputs (default: .)
  --output_format {txt,vtt,srt,tsv,json,all}, -f {txt,vtt,srt,tsv,json,all}
                        format of the output file; if not specified, all available formats will be produced (default: all)
  --verbose VERBOSE     whether to print out the progress and debug messages (default: True)
  --task {transcribe,translate}
                        whether to perform X->X speech recognition ('transcribe') or X->English translation ('translate') (default: transcribe)
  --language {af,am,ar,as,az,ba,be,bg,bn,bo,br,bs,ca,cs,cy,da,de,el,en,es,et,eu,fa,fi,fo,fr,gl,gu,ha,haw,he,hi,hr,ht,hu,hy,id,is,it,ja,jw,ka,kk,km,kn,ko,la,lb,ln,lo,lt,lv,mg,mi,mk,ml,mn,mr,ms,mt,my,ne,nl,nn,no,oc,pa,pl,ps,pt,ro,ru,sa,sd,si,sk,sl,sn,so,sq,sr,su,sv,sw,ta,te,tg,th,tk,tl,tr,tt,uk,ur,uz,vi,yi,yo,yue,zh,Afrikaans,Albanian,Amharic,Arabic,Armenian,Assamese,Azerbaijani,Bashkir,Basque,Belarusian,Bengali,Bosnian,Breton,Bulgarian,Burmese,Cantonese,Castilian,Catalan,Chinese,Croatian,Czech,Danish,Dutch,English,Estonian,Faroese,Finnish,Flemish,French,Galician,Georgian,German,Greek,Gujarati,Haitian,Haitian Creole,Hausa,Hawaiian,Hebrew,Hindi,Hungarian,Icelandic,Indonesian,Italian,Japanese,Javanese,Kannada,Kazakh,Khmer,Korean,Lao,Latin,Latvian,Letzeburgesch,Lingala,Lithuanian,Luxembourgish,Macedonian,Malagasy,Malay,Malayalam,Maltese,Mandarin,Maori,Marathi,Moldavian,Moldovan,Mongolian,Myanmar,Nepali,Norwegian,Nynorsk,Occitan,Panjabi,Pashto,Persian,Polish,Portuguese,Punjabi,Pushto,Romanian,Russian,Sanskrit,Serbian,Shona,Sindhi,Sinhala,Sinhalese,Slovak,Slovenian,Somali,Spanish,Sundanese,Swahili,Swedish,Tagalog,Tajik,Tamil,Tatar,Telugu,Thai,Tibetan,Turkish,Turkmen,Ukrainian,Urdu,Uzbek,Valencian,Vietnamese,Welsh,Yiddish,Yoruba}
                        language spoken in the audio, specify None to perform language detection (default: None)
  --temperature TEMPERATURE
                        temperature to use for sampling (default: 0)
  --best_of BEST_OF     number of candidates when sampling with non-zero temperature (default: 5)
  --beam_size BEAM_SIZE
                        number of beams in beam search, only applicable when temperature is zero (default: 5)
  --patience PATIENCE   optional patience value to use in beam decoding, as in https://arxiv.org/abs/2204.05424, the default (1.0) is equivalent to conventional beam search (default: None)
  --length_penalty LENGTH_PENALTY
                        optional token length penalty coefficient (alpha) as in https://arxiv.org/abs/1609.08144, uses simple length normalization by default (default: None)
  --suppress_tokens SUPPRESS_TOKENS
                        comma-separated list of token ids to suppress during sampling; '-1' will suppress most special characters except common punctuations (default: -1)
  --initial_prompt INITIAL_PROMPT
                        optional text to provide as a prompt for the first window. (default: None)
  --condition_on_previous_text CONDITION_ON_PREVIOUS_TEXT
                        if True, provide the previous output of the model as a prompt for the next window; disabling may make the text inconsistent across windows, but the model becomes less prone to getting stuck in a failure loop (default: True)
  --fp16 FP16           whether to perform inference in fp16; True by default (default: True)
  --temperature_increment_on_fallback TEMPERATURE_INCREMENT_ON_FALLBACK
                        temperature to increase when falling back when the decoding fails to meet either of the thresholds below (default: 0.2)
  --compression_ratio_threshold COMPRESSION_RATIO_THRESHOLD
                        if the gzip compression ratio is higher than this value, treat the decoding as failed (default: 2.4)
  --logprob_threshold LOGPROB_THRESHOLD
                        if the average log probability is lower than this value, treat the decoding as failed (default: -1.0)
  --no_speech_threshold NO_SPEECH_THRESHOLD
                        if the probability of the <|nospeech|> token is higher than this value AND the decoding has failed due to `logprob_threshold`, consider the segment as silence (default: 0.6)
  --word_timestamps WORD_TIMESTAMPS
                        (experimental) extract word-level timestamps and refine the results based on them (default: False)
  --prepend_punctuations PREPEND_PUNCTUATIONS
                        if word_timestamps is True, merge these punctuation symbols with the next word (default: "'“¿([{-)
  --append_punctuations APPEND_PUNCTUATIONS
                        if word_timestamps is True, merge these punctuation symbols with the previous word (default: "'.。,，!！?？:：”)]}、)
  --highlight_words HIGHLIGHT_WORDS
                        (requires --word_timestamps True) underline each word as it is spoken in srt and vtt (default: False)
  --max_line_width MAX_LINE_WIDTH
                        (requires --word_timestamps True) the maximum number of characters in a line before breaking the line (default: None)
  --max_line_count MAX_LINE_COUNT
                        (requires --word_timestamps True) the maximum number of lines in a segment (default: None)
  --max_words_per_line MAX_WORDS_PER_LINE
                        (requires --word_timestamps True, no effect with --max_line_width) the maximum number of words in a segment (default: None)
  --threads THREADS     number of threads used by torch for CPU inference; supercedes MKL_NUM_THREADS/OMP_NUM_THREADS (default: 0)
```
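Several of these options work together when a decoding attempt goes wrong. The sketch below restates, as plain Python, the rules that the help text describes for --temperature, --temperature_increment_on_fallback, --compression_ratio_threshold, --logprob_threshold and --no_speech_threshold. The function names are hypothetical, and Whisper's real decoding loop may differ in detail. The compression ratio here is computed with zlib, which uses the same DEFLATE compression as gzip.

```python
#!/usr/bin/env python
"""Sketch of the fallback rules described by `whisper -h` (not Whisper's own code)."""

import zlib


def fallback_temperatures(temperature: float = 0.0, increment: float = 0.2) -> list[float]:
    """Temperatures tried in turn: start at --temperature and step by
    --temperature_increment_on_fallback up to 1.0 (increment must be > 0)."""
    steps = int(round((1.0 - temperature) / increment))
    # Round to hide floating-point noise such as 0.6000000000000001
    return [round(temperature + i * increment, 6) for i in range(steps + 1)]


def compression_ratio(text: str) -> float:
    """Raw size divided by compressed size; repetitive (looping) text scores high."""
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def decoding_failed(text: str, avg_logprob: float,
                    compression_ratio_threshold: float = 2.4,
                    logprob_threshold: float = -1.0) -> bool:
    """A decoding fails if it is too repetitive or too improbable."""
    return (compression_ratio(text) > compression_ratio_threshold
            or avg_logprob < logprob_threshold)


def is_silence(no_speech_prob: float, avg_logprob: float,
               no_speech_threshold: float = 0.6,
               logprob_threshold: float = -1.0) -> bool:
    """Silence: <|nospeech|> is likely AND the log-probability test failed."""
    return no_speech_prob > no_speech_threshold and avg_logprob < logprob_threshold


if __name__ == "__main__":
    print(fallback_temperatures())             # default retry schedule
    print(decoding_failed("la " * 100, -0.3))  # highly repetitive text
    print(is_silence(0.9, -1.5))
```

A high compression ratio flags output in which the model keeps repeating itself; retrying at a higher temperature gives the decoder a chance to break out of that loop.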

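Here is a little Bash script I wrote to transcribe media files. For each MP4 file in the current directory that does not yet have a transcript, it runs the transcribe.py program from the next section and saves the text next to the video (x.mp4 produces x.mp4.txt):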

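```bash
#!/bin/bash
# dl is an external command (not part of Whisper); it receives the script's first argument.
dl -V . "$1"

# If there are no .mp4 files, skip the loop instead of iterating over the literal pattern.
shopt -s nullglob

for X in *.mp4; do
  # Only transcribe files that do not have a transcript yet
  if [ ! -f "$X.txt" ]; then
    python transcribe.py "$X" > "$X.txt"
  fi
done
```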

Let's try the transcribe script on a video file called x.mp4:

```
$ transcribe x.mp4
```
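If you would rather call the whisper command directly instead of going through transcribe.py, the Bash loop above can be sketched in Python. This sketch rests on assumptions: pending_files, transcript_path and whisper_command are hypothetical names, it uses only options listed by whisper -h, it picks the base model to match transcribe.py (the command's own default is small), and it assumes that whisper names each output file after its input with the extension replaced, so x.mp4 produces x.txt rather than the x.mp4.txt that the Bash script writes.

```python
#!/usr/bin/env python
"""Transcribe each *.mp4 in a directory with the whisper command,
skipping files that already have a transcript."""

import shutil
import subprocess
import sys
from pathlib import Path


def transcript_path(media: Path) -> Path:
    # Assumption: whisper replaces the extension, so x.mp4 becomes x.txt
    return media.with_suffix(".txt")


def pending_files(directory: Path, pattern: str = "*.mp4") -> list[Path]:
    """Media files in `directory` that have no transcript yet."""
    return sorted(p for p in directory.glob(pattern) if not transcript_path(p).exists())


def whisper_command(media: Path, model: str = "base") -> list[str]:
    """Build a whisper invocation that writes only a .txt file next to the media."""
    return ["whisper", str(media),
            "--model", model,
            "--output_format", "txt",
            "--output_dir", str(media.parent)]


def main(directory: Path) -> None:
    if shutil.which("whisper") is None:
        print("whisper command not found")
        return
    for media in pending_files(directory):
        subprocess.run(whisper_command(media), check=True)


if __name__ == "__main__":
    main(Path(sys.argv[1]) if len(sys.argv) > 1 else Path("."))
```

Pass a directory as the first argument, or run it with no arguments to process the current directory. Unlike the Bash script, this sketch does not call dl.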

Simple Python Program

Here is a simple Python program that uses Whisper. It converts every period followed by a space into a period followed by a newline, for readability.

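```python
#!/usr/bin/env python
"""Transcribe an audio or video file with Whisper and print one sentence per line."""

import os
import sys

import whisper


def usage(msg=None):
    """Print an optional message plus usage information, then exit with status 1."""
    if msg:
        print(msg)
    cmd = os.path.basename(sys.argv[0])
    print(f'''\
{cmd} - convert audio and video files to text

Usage: {cmd} FILE
''')
    sys.exit(1)


if len(sys.argv) < 2:
    usage()

file = sys.argv[1]
if not os.path.isfile(file):
    usage(f"{file}: no such file")

# Model sizes include tiny, base, small, medium and large;
# larger models are more accurate but slower.
model = whisper.load_model("base")

# Put each sentence on its own line for readability
result = model.transcribe(file)["text"].replace(". ", ".\n")

print(result)
```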
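The program above keeps only the "text" entry of the dictionary that model.transcribe() returns. That dictionary also holds a "segments" list whose entries carry "start" and "end" times in seconds alongside their own "text". The sketch below, with hypothetical helper names, turns those segments into timestamped lines. It works on a hand-made sample so it runs without downloading a model.

```python
#!/usr/bin/env python
"""Format the timed segments returned by model.transcribe() as readable lines."""


def timestamp(seconds: float) -> str:
    """Render a time in seconds as MM:SS.mmm."""
    millis = round(seconds * 1000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{minutes:02d}:{secs:02d}.{millis:03d}"


def format_segments(result: dict) -> str:
    """One line per segment: [start --> end] text."""
    lines = []
    for seg in result["segments"]:
        start, end = timestamp(seg["start"]), timestamp(seg["end"])
        lines.append(f"[{start} --> {end}] {seg['text'].strip()}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hand-made stand-in for model.transcribe(file); real results have more keys.
    sample = {
        "text": " Hello there. How are you?",
        "segments": [
            {"start": 0.0, "end": 1.5, "text": " Hello there."},
            {"start": 1.5, "end": 3.25, "text": " How are you?"},
        ],
    }
    print(format_segments(sample))
```

To use it with real output, pass the result of whisper.load_model("base").transcribe(file) to format_segments in place of the sample.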

Let's try the transcribe.py program on a video file called x.mp4:

```
$ python transcribe.py x.mp4
```

If you make the script executable, you do not have to type the word python. Drop the ./ prefix if the directory containing transcribe.py is on your PATH:

```
$ chmod a+x transcribe.py
$ ./transcribe.py x.mp4
```
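The program's readability tweak only breaks lines after a period followed by a space, so questions and exclamations stay run together. Here is a sketch that compares that rule with a regular-expression variant of my own (not part of Whisper) that also breaks after ? and !; both function names are hypothetical.

```python
#!/usr/bin/env python
"""Compare transcribe.py's line-breaking rule with a regex variant."""

import re


def split_like_program(text: str) -> str:
    """The rule used by transcribe.py: break after every '. '."""
    return text.replace(". ", ".\n")


def split_sentences(text: str) -> str:
    """Also break after '?' and '!', collapsing the whitespace that follows."""
    return re.sub(r"([.!?])\s+", r"\1\n", text.strip())


if __name__ == "__main__":
    sample = " Hello there. How are you? Fine, thanks!"
    print(split_like_program(sample))
    print(split_sentences(sample))
```

Both rules also break after abbreviations such as "Dr.", so treat the output as a reading aid rather than true sentence segmentation.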

OpenVINO

Whisper powers the OpenVINO transcription plugin for Audacity.