Published 2024-01-16.

Time to read: 2 minutes.


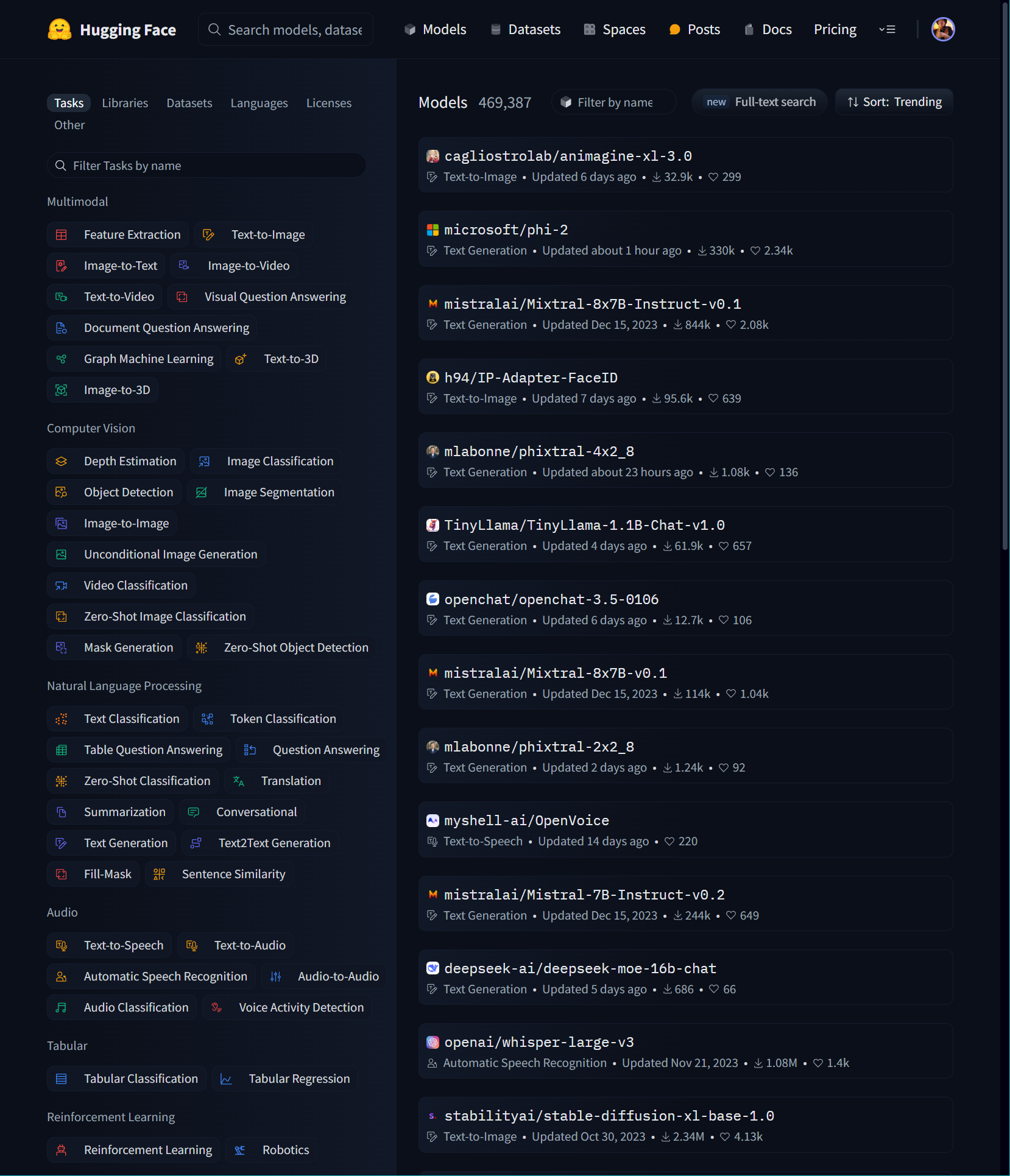

The Hugging Face Hub is an extensive resource for sharing machine learning (ML) models, documentation, demonstrations, datasets, and metrics. It provides a customized version of Git, specially adapted for the large repositories that ML models require. The free tier is remarkably capable. Paid plans are modestly priced, and private models can be run economically on Inference Endpoints, at least until you need NVIDIA A100 servers with more than two GPUs.

Hugging Face provides a Python library, which makes sense because the machine learning community uses Python more than any other programming language. The library includes a command-line interface (CLI), `huggingface-cli`, for working with the resources that Hugging Face provides.

$ pip install --upgrade huggingface_hub

The Quickstart has more information.

    $ huggingface-cli
    usage: huggingface-cli <command> [<args>]

    positional arguments:
      {env,login,whoami,logout,repo,upload,download,lfs-enable-largefiles,lfs-multipart-upload,scan-cache,delete-cache}
                            huggingface-cli command helpers
        env                 Print information about the environment.
        login               Log in using a token from huggingface.co/settings/tokens
        whoami              Find out which huggingface.co account you are logged in as.
        logout              Log out
        repo                {create} Commands to interact with your huggingface.co repos.
        upload              Upload a file or a folder to a repo on the Hub
        download            Download files from the Hub
        lfs-enable-largefiles
                            Configure your repository to enable upload of files > 5GB.
        scan-cache          Scan cache directory.
        delete-cache        Delete revisions from the cache directory.

    options:
      -h, --help            show this help message and exit

Many operations require a user token, which uniquely identifies you to the Hub.

    $ huggingface-cli login

    To login, `huggingface_hub` requires a token generated from https://huggingface.co/settings/tokens .
    Token:

It is easy to get a user token.

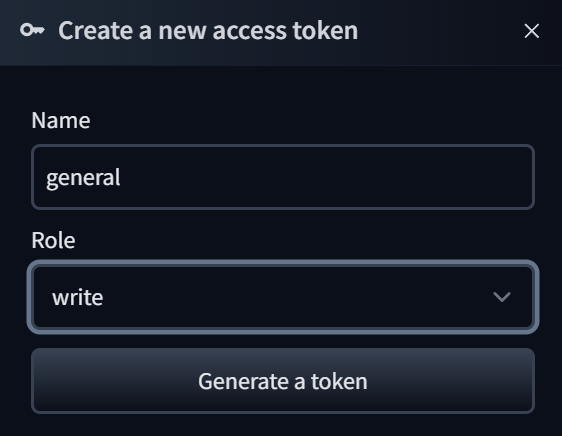

I opened the URL shown above (huggingface.co/) and was taken to a web page where I named the new user token.

I then chose between read and write permissions.

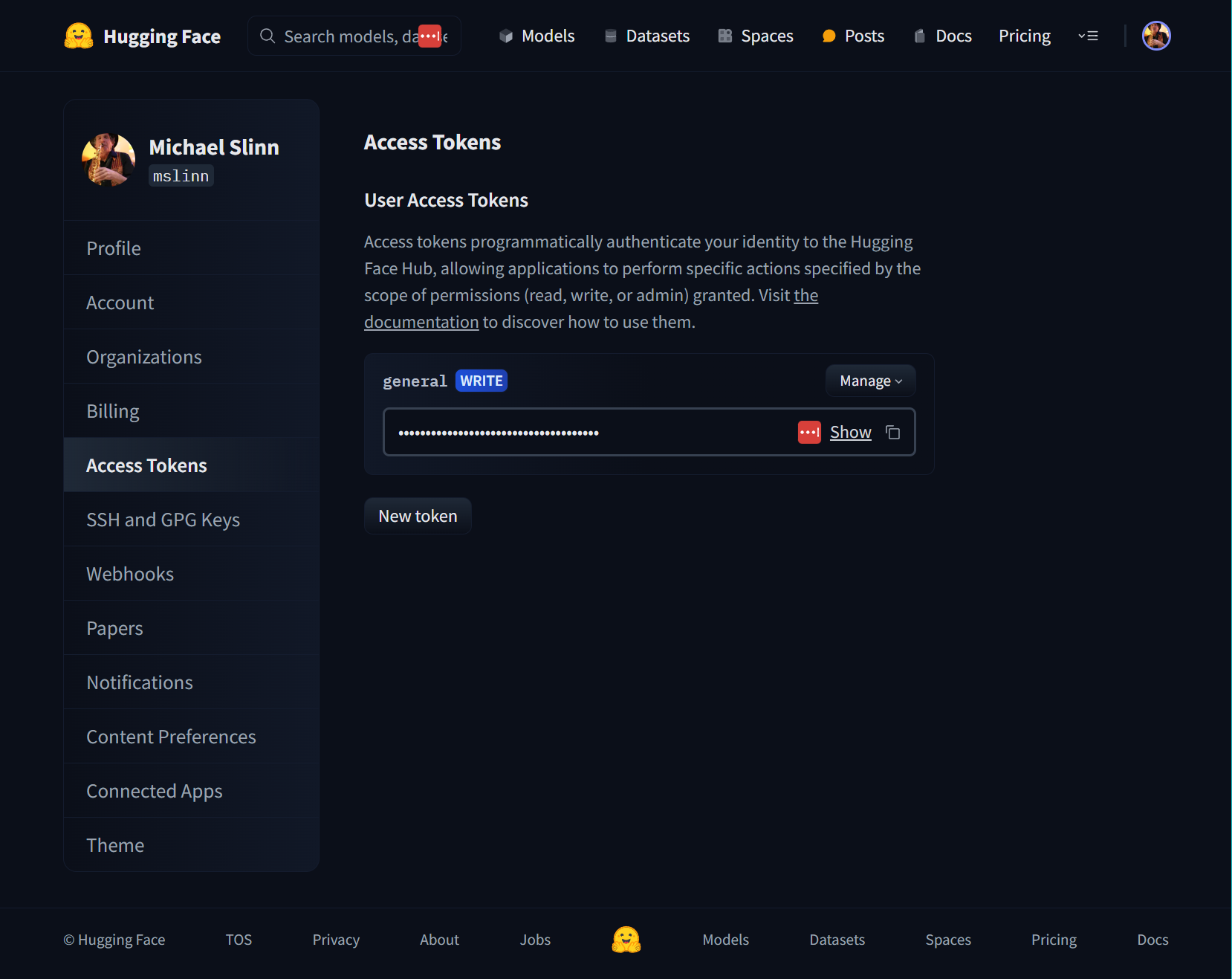

I clicked the copy-to-clipboard icon, which placed the new user token on my system clipboard.

Then I pasted the user token from the clipboard into the `Token:` prompt shown previously. I was then asked whether the user token should also be used as my Git credentials:

    Add token as git credential? (Y/n)
    Token is valid (permission: write).
    Cannot authenticate through git-credential as no helper is defined on your machine.
    You might have to re-authenticate when pushing to the Hugging Face Hub.
    Run the following command in your terminal in case you want to set the 'store' credential helper as default.

    git config --global credential.helper store

    Read https://git-scm.com/book/en/v2/Git-Tools-Credential-Storage for more details.
    Token has not been saved to git credential helper.
    Your token has been saved to /home/mslinn/.cache/huggingface/token
    Login successful
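If you want your own scripts to reuse the saved token, the Python sketch below shows one way to find it. It is an approximation, not the library's own code: I am assuming that recent versions of `huggingface_hub` check the `HF_TOKEN` environment variable first, then read a file called `token` under `HF_HOME`, which defaults to `$XDG_CACHE_HOME/huggingface` or, failing that, `~/.cache/huggingface`. The function names `hf_home` and `find_hf_token` are hypothetical, and the real lookup order may differ between library versions.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def hf_home(env: Mapping[str, str] = os.environ) -> Path:
    """Return the assumed Hugging Face home directory (hypothetical helper)."""
    # Assumption: an explicit HF_HOME overrides everything else.
    if env.get("HF_HOME"):
        return Path(env["HF_HOME"]).expanduser()
    # Assumption: otherwise use $XDG_CACHE_HOME/huggingface, else ~/.cache/huggingface.
    xdg = env.get("XDG_CACHE_HOME")
    cache = Path(xdg).expanduser() if xdg else Path.home() / ".cache"
    return cache / "huggingface"


def find_hf_token(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return a token from HF_TOKEN, else from the saved token file, else None."""
    # Assumption: the environment variable takes precedence over the file.
    token = env.get("HF_TOKEN")
    if token:
        return token.strip()
    # The login output above saved the token to <HF home>/token.
    token_file = hf_home(env) / "token"
    if token_file.is_file():
        return token_file.read_text(encoding="utf-8").strip() or None
    return None


if __name__ == "__main__":
    # Report only whether a token exists; never print the secret itself.
    print("token found" if find_hf_token() else "no token found")
```

The sketch deliberately reports only whether a token was found, because printing the token would leak a secret into terminal scrollback and logs.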

It is a good idea to let Git obtain the user token automatically whenever it needs credentials, by making `store` the default credential helper as the message above suggests:

$ git config --global credential.helper store
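Be aware that the `store` helper saves credentials unencrypted in a plain-text file, `~/.git-credentials` by default, one URL per line in the form `https://user:password@host`, with special characters percent-encoded. The hypothetical Python function below builds such a line, just to show what ends up on disk; the username and token are made-up placeholders, and in practice Git writes this file for you.

```python
from urllib.parse import quote


def git_credentials_line(username: str, token: str, host: str = "huggingface.co") -> str:
    """Format one ~/.git-credentials entry for the 'store' helper (illustrative only)."""
    # Percent-encode both parts so characters such as '@' or ':' stay unambiguous.
    user = quote(username, safe="")
    secret = quote(token, safe="")
    return f"https://{user}:{secret}@{host}"


if __name__ == "__main__":
    # Hypothetical placeholder values; never print a real token.
    print(git_credentials_line("example-user", "hf_example"))
```

Because anyone who can read `~/.git-credentials` can read the token, this convenience is best reserved for machines you control.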

Git Repositories

Hugging Face hosts Git repositories for machine learning.

Models

No model today is good at everything. Instead, models specialize in particular tasks. That is why Hugging Face hosts over 325,000 models at the time of writing. Hugging Face categorizes them by task and other attributes, so you can find and try out models suited to your needs.