Published 2017-01-10.

Time to read: 6 minutes.

Amazon’s Alexa is a runaway success. Already, 5% of US households have a hands-free, voice-operated device. 50% of Echo owners keep one in the kitchen. Gartner predicts that by 2018, 30% of our interactions with technology will be via voice conversations.

The Near Future

The near future will have many hands-free, voice-driven, Internet-aware applications, and some will also provide a web interface to satisfy use cases that require a text or graphic display. Potential usages include hands-free voice control of applications running on computers, tablets and phones, hands-free voice control of home devices and vehicles, dictaphones, and games that provide anthropomorphic characters with the ability to generate and understand speech.

Services that developers and integrators can use for hands-free, voice-operated applications include Amazon’s Alexa, Google Assistant, Google Voice, Apple’s Siri, and Microsoft Cortana.

The Present

Only recently has it become feasible to use hands-free voice as a user interface. The best hands-free voice applications are innately distributed: they perform some computation on a local device, and they also require a connection to a server to do the heavy computation necessary for a high-quality experience. Most companies developing applications that use hands-free voice as a user interface require, and will continue to require, services provided by third parties. However, from the point of view of the application developer's company, the data shared with these third parties can represent a significant security risk. From the user's point of view, this data can represent a potentially significant privacy breach. In this article I discuss why this is so, and what can be done about it.

Privacy and security concerns for synthesizing speech are minimal because quality speech can be synthesized without context specific to an individual. This means that user data need not be associated with the words or phrases being synthesized, so anonymous phrases can be and should be sent to the remote service that generates the audio files produced by the speech synthesis.

The best value for high-quality voice generation is achieved with a distributed solution.

In this scenario, voice generation is simple to initiate: a text string is sent to a remote service, and an audio clip containing synthesized speech is returned.

Most voice generators support embedded markup to control inflection. For example, Amazon Polly and Google Assistant (formerly api.ai) both use SSML; Apple uses a variety of techniques across its products, and Microsoft uses SAPI.
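As a rough illustration of the markup mentioned above, the following Python sketch wraps an anonymous phrase in a minimal SSML document. The `<speak>`, `<emphasis>` and `<break>` elements come from the SSML standard; the helper name `build_ssml` and its parameters are hypothetical, and actually sending the string to a synthesis service is left out because each provider has its own API.

```python
from xml.sax.saxutils import escape


def build_ssml(sentence: str, emphasized: str = "", pause_ms: int = 0) -> str:
    """Wrap plain text in a minimal SSML document (hypothetical helper).

    Only the phrase to be spoken goes into the markup; no user
    identifiers are attached, so the request can stay anonymous.
    """
    # Escape characters such as < and & so the result is valid XML.
    body = escape(sentence)
    if emphasized:
        marked = f'<emphasis level="strong">{escape(emphasized)}</emphasis>'
        # Emphasize only the first occurrence of the chosen word.
        body = body.replace(escape(emphasized), marked, 1)
    if pause_ms > 0:
        # Add a trailing pause of the requested length.
        body += f'<break time="{pause_ms}ms"/>'
    return f"<speak>{body}</speak>"


print(build_ssml("Your timer is done", emphasized="done", pause_ms=300))
```

The resulting string is what would be sent to the remote service in place of plain text; the service would return the audio clip.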

In contrast, the heavy lifting necessary to recognize unconstrained vocabulary requires substantial compute resources, and raises privacy and ethical issues. The main issues are:

- Recognizing a trigger word or phrase

- Training a voice recognition engine

- Determining the appropriate privacy/effectiveness trade-off for your application

- Integrating with third-party or proprietary services

What Are These Things Made From?

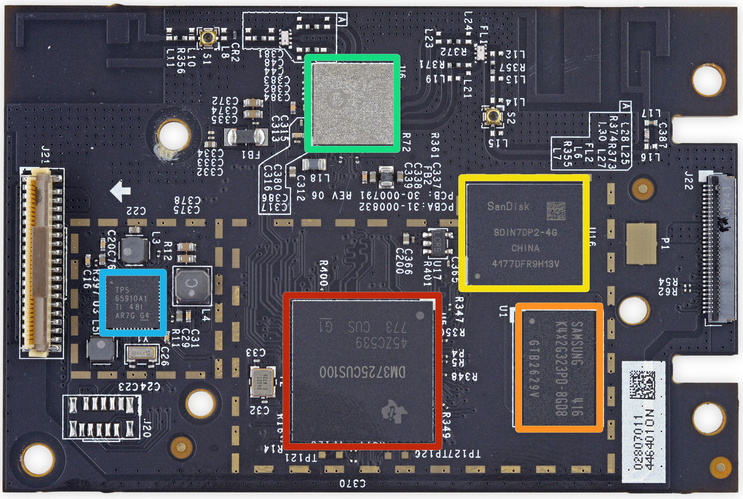

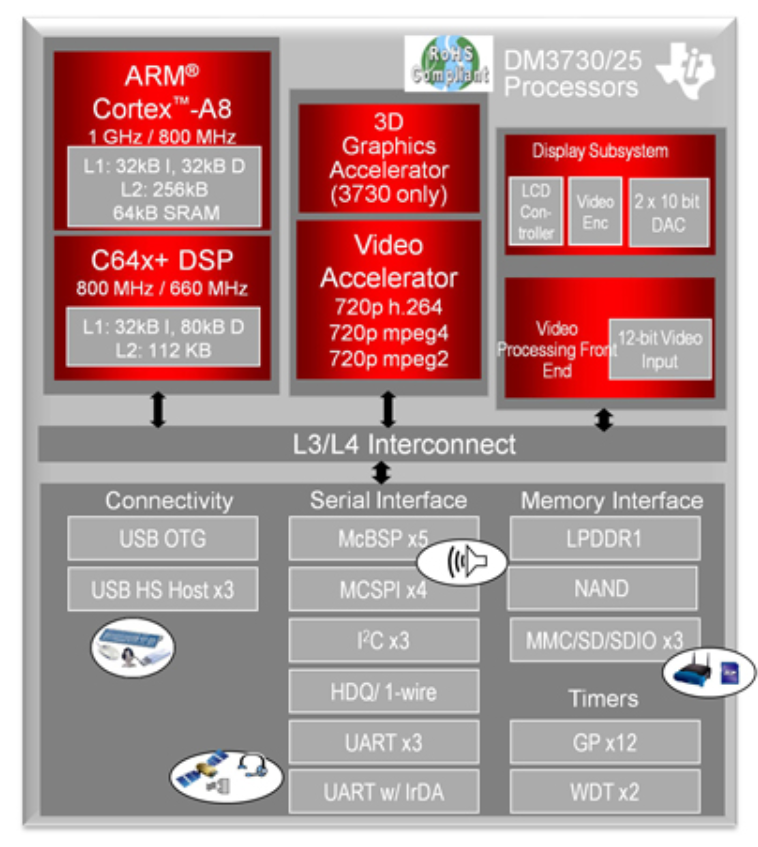

Hands-free, voice-operated devices have most of the same components as a tablet, but often do not have a screen. An ARM A8 to A11 processor is typically embedded in a chip die that also contains a powerful digital signal processor. In other words, they can do a lot of computing; they are more powerful than any mobile phone, and they are more powerful than most tablets.

The open source ecosystem that has grown up around these DSP/CPU chips includes many applications and a variety of operating systems, including real-time operating systems and various Linux distributions. The supported OSes are: Android, DSP/BIOS, Neutrino, Integrity, Windows Embedded CE, Linux, and VxWorks. It is quick and easy to design a complete device similar to Alexa and bring it to market.

Recognizing a trigger word or phrase

One of the first major challenges in designing a device that responds to voice is deciding how to ignore silence and how to recognize noise that should be ignored. This requires some level of signal processing. In contrast, requiring the user to push a button makes for a much simpler user interface, but this requirement would greatly restrict the types of applications possible.
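To make the signal-processing step concrete, here is a minimal Python sketch of an energy gate that drops silent frames. It is an assumption-laden illustration, not what any of the products discussed here actually do: the function names, the threshold of 0.05, and the "hangover" of two trailing frames are all hypothetical, and samples are assumed to be floats in the range -1.0 to 1.0.

```python
import math
from typing import Iterable, Iterator, Sequence


def rms(frame: Sequence[float]) -> float:
    """Root-mean-square energy of one frame of audio samples."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def voiced_frames(
    frames: Iterable[Sequence[float]],
    threshold: float = 0.05,  # hypothetical energy threshold
    hangover: int = 2,        # quiet frames kept after the last loud frame
) -> Iterator[Sequence[float]]:
    """Yield frames at or above the threshold, plus a short tail.

    The tail avoids clipping the quiet end of a word.
    """
    remaining = 0
    for frame in frames:
        if rms(frame) >= threshold:
            remaining = hangover
            yield frame
        elif remaining > 0:
            remaining -= 1
            yield frame


silence = [0.0] * 160
speech = [0.5, -0.5] * 80
kept = list(voiced_frames([silence, speech, silence, silence, silence, silence]))
print(len(kept))  # the loud frame plus two trailing quiet frames
```

A fixed energy threshold cannot tell speech from a slammed door, which is why real devices need more than this before they decide that someone is talking to them.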

I am a ham radio operator, and I use the well-known protocol for addressing a specific individual when using a broadcast medium. If I want to talk to another ham operator, I address them by their call sign three times and await their acknowledgement before giving my message. “KG6LBG, KG6LBG, KG6LBG, this is KG6LDE, do you read me?” If that person hears their call sign, they reply “KG6LDE, this is KG6LBG, go ahead.” “KG6LBG, I just called to say hello, over.” “KG6LDE, Hello yourself, over and out.”

Hands-free voice applications also need to recognize a trigger word or phrase (also known as a hotword) that prefaces an audio stream which will be processed for voice recognition. Without such a trigger, either the user would need to press a button to start recording their speech, or a continuous audio stream would have to be processed. I'm interested in hands-free voice recognition, which rules out the button. Since the voice recognition processing must be done on a remote service, a lot of bandwidth and CPU power would be wasted processing silence or irrelevant sound. Because it is undesirable to send a continuous audio stream to a server just to recognize trigger words accurately, they must be recognized locally, and the methods used to do so are less accurate.
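The gating behavior described above can be sketched as a two-state machine: stay idle until a hotword is heard locally, then collect audio for the remote recognizer until the speaker goes quiet. This Python sketch is only an illustration; `is_hotword` and `is_silence` are hypothetical callables standing in for a real local detector, and the three-frame silence limit is an arbitrary assumption.

```python
from enum import Enum
from typing import Callable, Iterable, List, TypeVar

Frame = TypeVar("Frame")


class State(Enum):
    IDLE = "idle"            # listening locally for the hotword only
    STREAMING = "streaming"  # collecting audio to send to the server


def gate_audio(
    frames: Iterable[Frame],
    is_hotword: Callable[[Frame], bool],   # hypothetical local detector
    is_silence: Callable[[Frame], bool],   # hypothetical silence detector
    max_silent_frames: int = 3,
) -> List[List[Frame]]:
    """Return the utterances (lists of frames) that follow a hotword."""
    state = State.IDLE
    utterances: List[List[Frame]] = []
    current: List[Frame] = []
    silent_run = 0
    for frame in frames:
        if state is State.IDLE:
            # Nothing leaves the device until the hotword is heard.
            if is_hotword(frame):
                state = State.STREAMING
                current = []
                silent_run = 0
        elif is_silence(frame):
            silent_run += 1
            if silent_run >= max_silent_frames:
                # The speaker has stopped; close the utterance.
                if current:
                    utterances.append(current)
                state = State.IDLE
        else:
            silent_run = 0
            current.append(frame)
    if state is State.STREAMING and current:
        utterances.append(current)
    return utterances


# Strings stand in for audio frames in this toy run.
stream = ["noise", "HOT", "turn", "on", "", "", "", "noise", "HOT", "stop"]
print(gate_audio(stream, lambda f: f == "HOT", lambda f: f == ""))
```

Only the frames inside each returned utterance would be sent to the server; everything heard while idle stays on the device.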

Proprietary Alternatives

Both of the products listed below can be configured to perform trigger word recognition without requiring any bandwidth between the CPU running the recognition program and a server. Neither of them provides a JavaScript implementation, which means that unless the trigger word recognition program is installed on the local machine, it must be installed on a connected server, and bandwidth will be used at all times.

- Sensory, Inc's TrulyHandsfree library (license), based in Santa Clara, CA. “We do not have any low cost or free license or library. We are unable to support any student or individual.”

- kitt.ai's Snowboy, based in Seattle, WA and partially funded by Amazon.

CMU Sphinx

Designed for low-resource platforms, implementations of CMU’s Sphinx exist for C (with Python support) and Java. Hotword spotting is supported. Several versions of Sphinx exist, with varying free and commercial licenses. Reports suggest that Sphinx works reasonably well, but I have not tested it yet. Sphinx powers Jasper.

JavaScript alternatives

- Annyang works well, but requires Google's web browsers and servers, so it is probably not reasonable to use it just for hotword detection.

- Voice-commands.js also requires Google's web browsers and servers.

- Sonus is a Node framework that is intended to support a variety of back ends one day. It does hotword detection by using Snowboy; the authors obviously ignored Snowboy's license terms.

- JuliusJS is a JavaScript port of the “Large Vocabulary Continuous Speech Recognition Engine Julius”. It does not call any servers and runs in most browsers. Recognition is weak and it requires a lot of CPU.

- PocketSphinx.js is a free browser-based alternative; unfortunately, it is horrible.

Training a voice recognition engine

Voice recognition engines need to be trained on a large dataset for the desired languages. Recognition effectiveness grows less than linearly with the size of the dataset, and the quality of the dataset matters as well, so truly large amounts of data are required. Amazon, Apple, Google and Microsoft have commercial products that were trained using enormous proprietary datasets. This is a substantial investment, so only well-capitalized organizations will be able to offer their own voice recognition engines for unconstrained vocabularies.

Determining Your Application's Privacy / Effectiveness Tradeoff

A voice recognition engine's effectiveness increases for specific users if their voice streams are recorded and stored, then used for further training. However, this means that privacy and security are traded off for effectiveness. A service provider's tradeoff might not be optimal for your use case. Apple's Siri only associates the stored voice recordings with you for 6 months. Google's Assistant and Voice, and Amazon Alexa, store all your voice recordings forever, unless you explicitly delete them. I could not discover how long Microsoft's Cortana and Skype store voice recordings, or how to delete them.
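If you store recordings yourself, the tradeoff can be made explicit as a retention policy. The Python sketch below is purely illustrative and does not describe how any of the providers above work: the `Recording` type, the `apply_retention` function, and the example windows (roughly six months before a recording is detached from its user, one year before it is deleted) are all assumptions.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class Recording:
    """Hypothetical record of one stored voice clip."""
    user_id: Optional[str]  # None once the clip has been anonymized
    recorded_at: datetime
    audio_ref: str          # pointer to the stored audio, not the audio itself


def apply_retention(
    recordings: List[Recording],
    now: datetime,
    anonymize_after: timedelta,
    delete_after: Optional[timedelta] = None,
) -> List[Recording]:
    """Detach old recordings from their user and drop very old ones."""
    kept = []
    for rec in recordings:
        age = now - rec.recorded_at
        if delete_after is not None and age > delete_after:
            continue  # too old: do not keep it at all
        if age > anonymize_after:
            rec = replace(rec, user_id=None)  # keep for training, drop identity
        kept.append(rec)
    return kept


now = datetime(2017, 1, 10)
stored = [
    Recording("u1", datetime(2017, 1, 1), "clip-a"),
    Recording("u1", datetime(2016, 6, 1), "clip-b"),
    Recording("u1", datetime(2015, 1, 1), "clip-c"),
]
for rec in apply_retention(stored, now, timedelta(days=183), timedelta(days=365)):
    print(rec.audio_ref, rec.user_id)
```

Shorter windows favor privacy; longer windows give the recognizer more user-specific training data.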

Integrating With Third-party or Proprietary Services

Because voice recognition returns a structured document like JSON or XML, and voice generation is simple to initiate, integration is well understood and many options exist.
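As a sketch of what such an integration might look like, the Python below parses a recognition result and dispatches it to a handler. The JSON shape (`transcript`, `intent`, `slots`, `confidence`) is a made-up example rather than the format of any particular service, and the handler names and the 0.6 confidence cutoff are likewise assumptions.

```python
import json
from typing import Callable, Dict

# Hypothetical response; real services each define their own schema.
SAMPLE_RESPONSE = """
{
  "transcript": "turn on the kitchen light",
  "intent": "lights.on",
  "slots": {"room": "kitchen"},
  "confidence": 0.92
}
"""


def lights_on(slots: Dict[str, str]) -> str:
    """Example handler; a real one would call a home-automation API."""
    return f"Turning on the {slots.get('room', 'default')} light"


# Map intent names to handlers (hypothetical intent names).
HANDLERS: Dict[str, Callable[[Dict[str, str]], str]] = {
    "lights.on": lights_on,
}


def handle_recognition(raw: str, min_confidence: float = 0.6) -> str:
    """Parse a recognition result and return the text to speak back."""
    result = json.loads(raw)
    if result.get("confidence", 0.0) < min_confidence:
        return "Sorry, I did not catch that"
    handler = HANDLERS.get(result.get("intent", ""))
    if handler is None:
        return "Sorry, I cannot do that yet"
    return handler(result.get("slots", {}))


print(handle_recognition(SAMPLE_RESPONSE))
```

The returned string is what would then be passed to the speech synthesis service to produce the spoken reply.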